Geometric perception

(part 1)

MIT 6.4210/2: Robotic Manipulation

Fall 2022, Lecture 5

Follow live at https://slides.com/d/3C55OuI/live

(or later at https://slides.com/russtedrake/fall22-lec05)

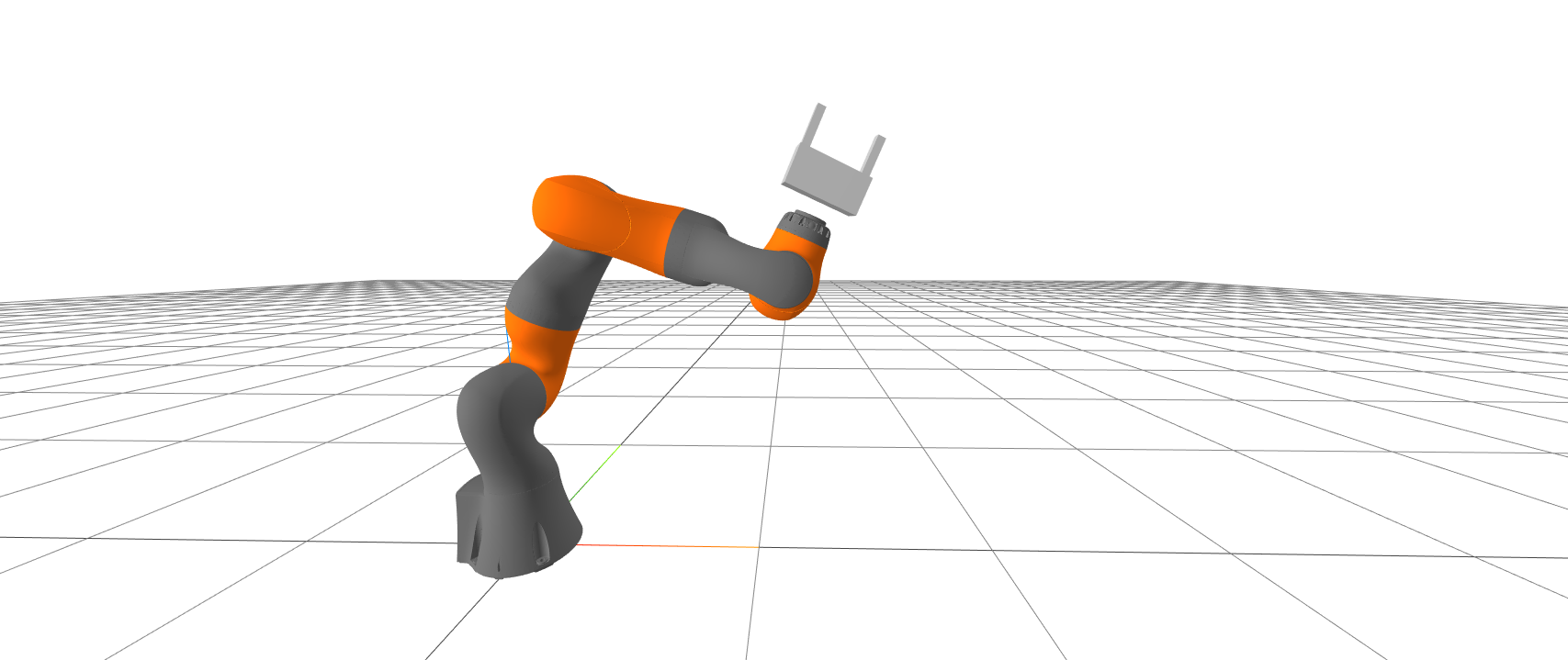

Basic Pick and Place

So far, we have assumed a "perception oracle" that could tell us \({}^WX^O\).

Stop using "cheat ports"

Use the cameras instead!

Pick and Place with Perception

Geometry sensors -- lidar / time of flight

Velodyne spinning lidar

Hokuyo scanning lidar

Luminar

(500 m range)

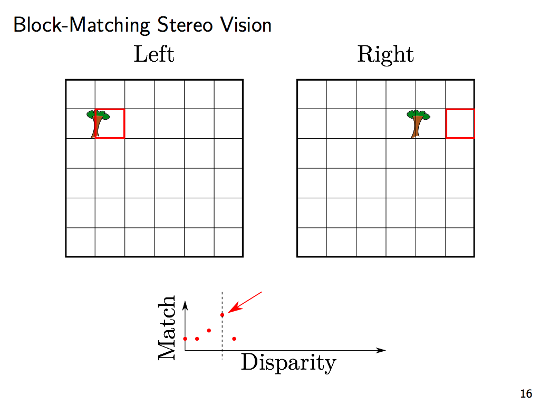

Geometry sensors -- stereo

Carnegie Robotics MultiSense stereo

Point Grey Bumblebee

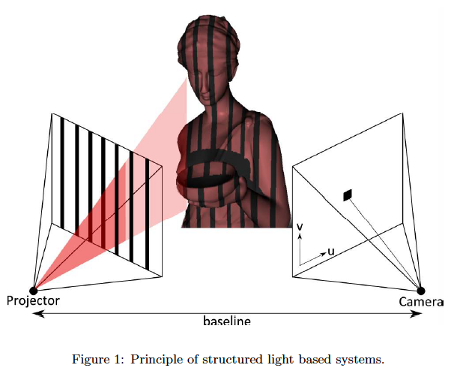

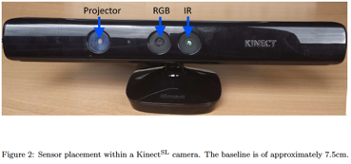

Geometry sensors -- structured light

Microsoft Kinect

Asus Xtion

https://arxiv.org/abs/1505.05459

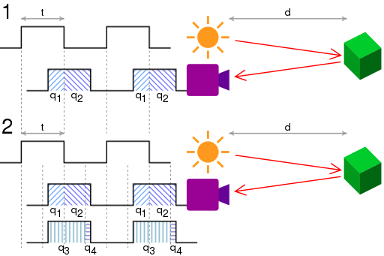

Geometry sensors -- time of flight

Microsoft Kinect v2

Samsung Galaxy DepthVision

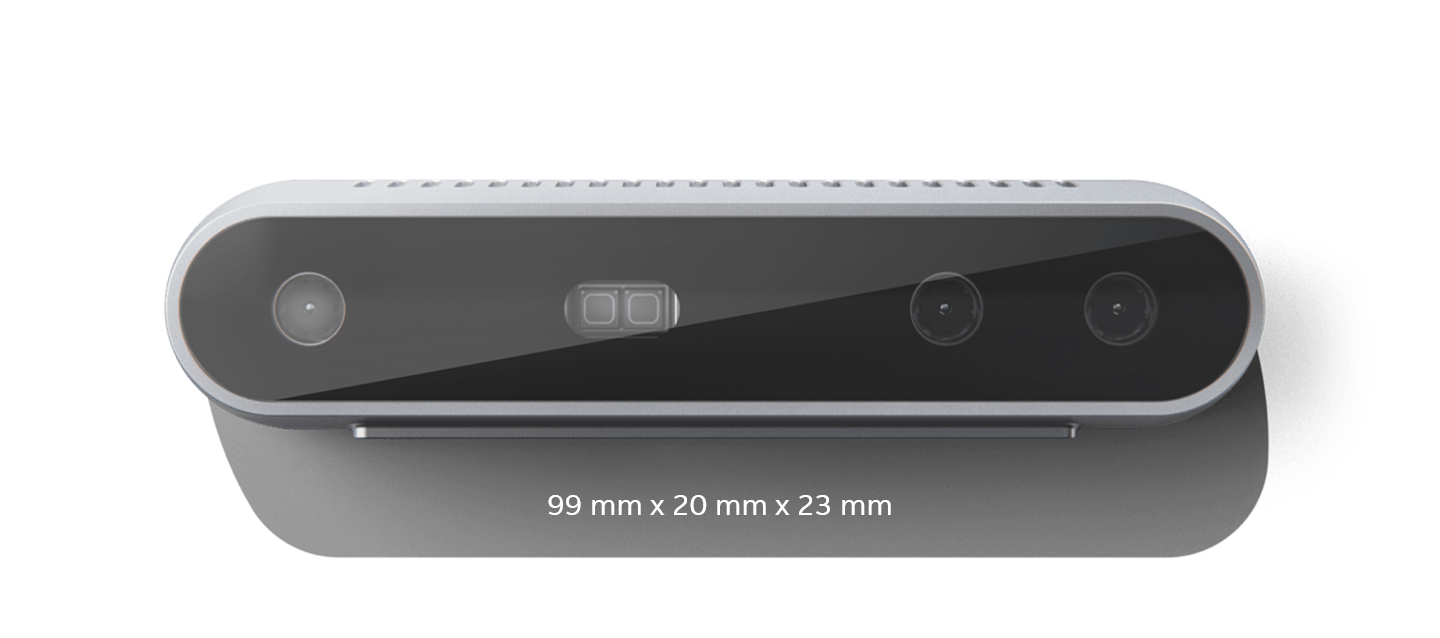

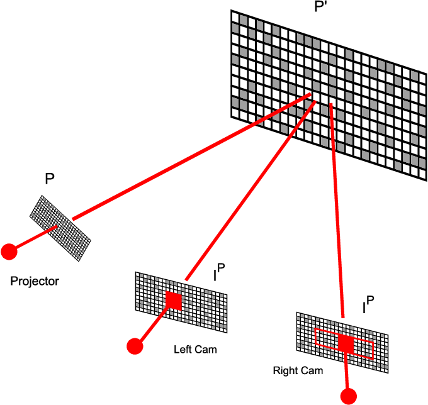

Geometry sensors -- projected texture stereo

Intel RealSense D415

Our pick for the "Manipulation Station"

Major advantage over e.g. ToF: multiple cameras don't interfere with each other.

(also iPhone TrueDepth)

from the docs: "Each pixel in the output image from depth_image is a 16bit unsigned short in millimeters."
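A minimal sketch of what that encoding implies, using plain NumPy rather than any camera SDK: a 16-bit millimeter image converts to meters by dividing by 1000, and the largest value it can represent is 65.535 m. The function name `depth16_to_meters` and the sample array are illustrative assumptions, not part of the docs quoted above.

```python
import numpy as np


def depth16_to_meters(depth_mm: np.ndarray) -> np.ndarray:
    """Convert a uint16 depth image in millimeters to float32 meters.

    Hypothetical helper for illustration; not a RealSense or Drake API.
    """
    if depth_mm.dtype != np.uint16:
        raise TypeError("expected a uint16 depth image")
    return depth_mm.astype(np.float32) / 1000.0


# A made-up 2x2 depth image (values in millimeters).
depth_mm = np.array([[500, 1250], [2000, 65535]], dtype=np.uint16)
depth_m = depth16_to_meters(depth_mm)

# The largest representable depth with this encoding, in meters.
max_range_m = np.iinfo(np.uint16).max / 1000.0
```

Sensors and simulators often reserve particular pixel values for "no return" or "out of range"; check the specific documentation before treating every pixel as a valid measurement.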

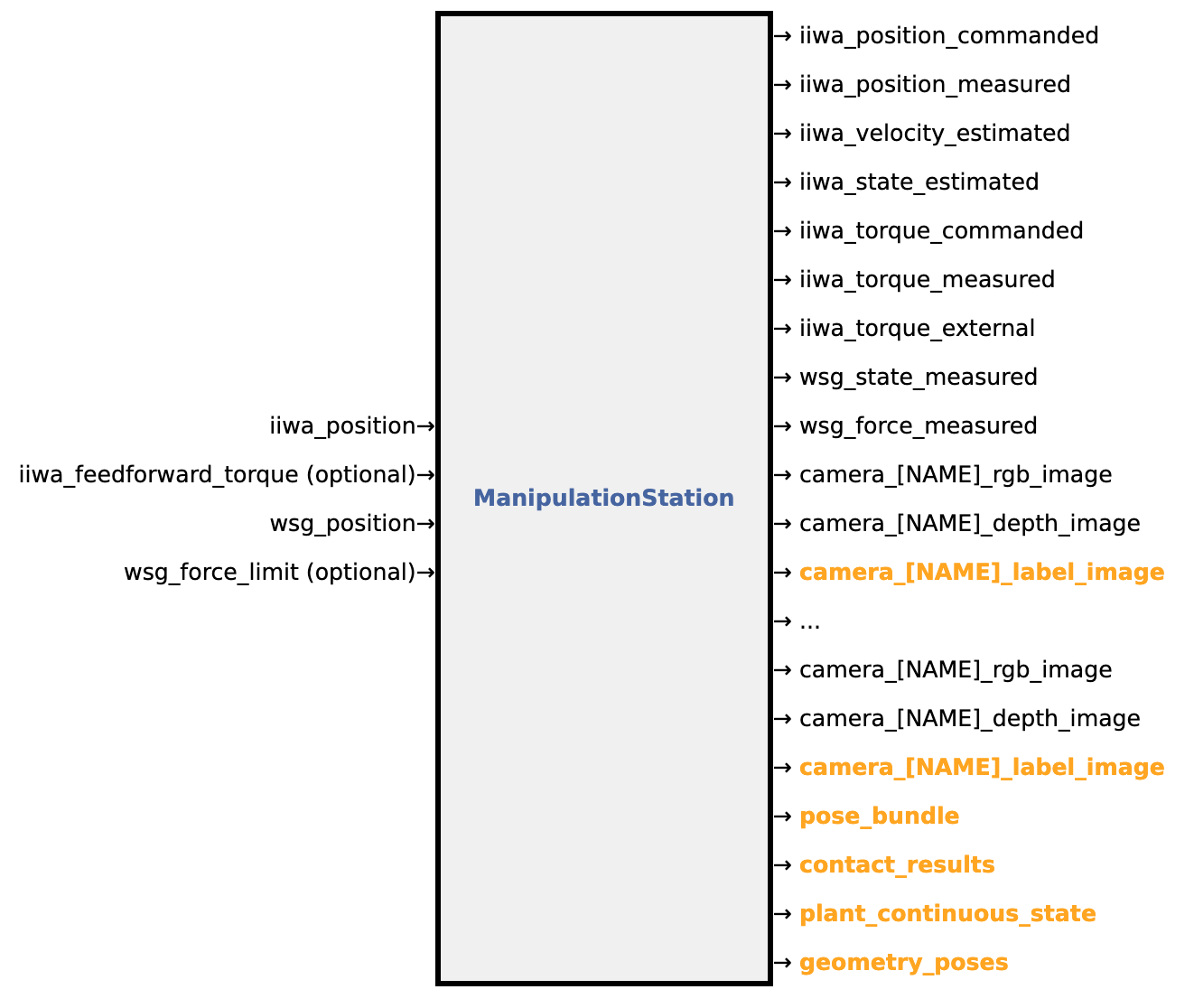

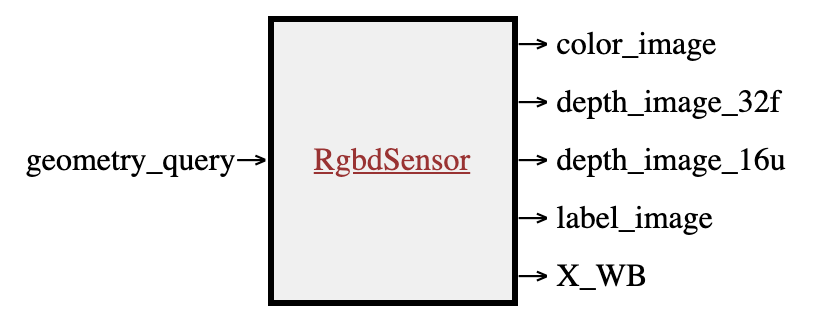

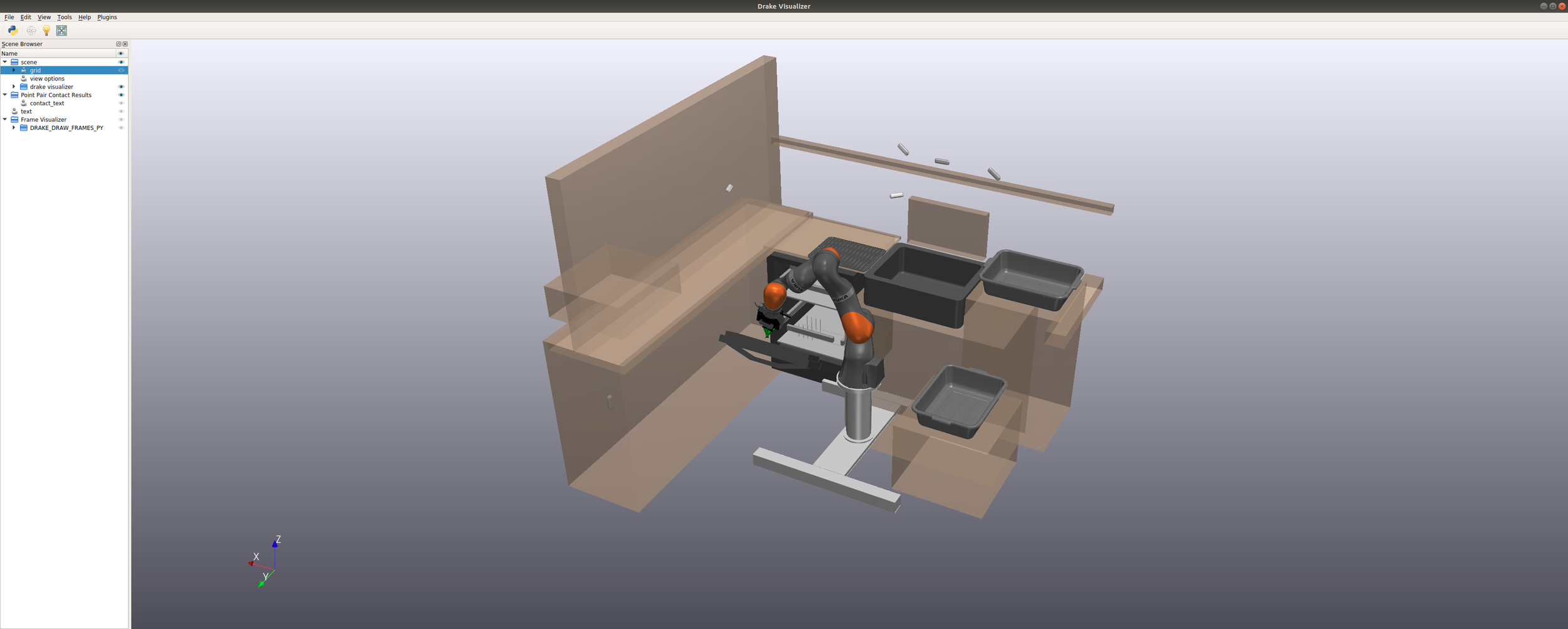

MultibodyPlant

SceneGraph

RgbdSensor

DepthImageToPointCloud

MeshcatVisualizer

MeshcatPointCloudVisualizer
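The geometry behind turning a depth image into a point cloud can be sketched in plain NumPy. This is not Drake's `DepthImageToPointCloud` implementation, only the standard pinhole-camera back-projection it relies on conceptually. The intrinsics `fx`, `fy`, `cx`, `cy`, the function names, and the camera pose (`R_WC`, `p_WC`) are assumptions chosen for illustration.

```python
import numpy as np


def depth_to_point_cloud(depth_m, fx, fy, cx, cy):
    """Back-project a depth image (meters) to an (N, 3) cloud in the camera frame.

    Assumes a pinhole model with focal lengths (fx, fy) and principal
    point (cx, cy), all in pixels. Pixels with non-finite or non-positive
    depth are dropped.
    """
    height, width = depth_m.shape
    # u indexes columns (image x), v indexes rows (image y).
    u, v = np.meshgrid(np.arange(width), np.arange(height))
    z = depth_m
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    valid = np.isfinite(points[:, 2]) & (points[:, 2] > 0)
    return points[valid]


def transform_points(R_WC, p_WC, points_C):
    """Express camera-frame points in the world frame: p_W = R_WC p_C + p_WC."""
    return points_C @ R_WC.T + p_WC


# Toy example: a 2x2 image where every pixel is 2 m away.
depth_m = np.full((2, 2), 2.0)
points_C = depth_to_point_cloud(depth_m, fx=1.0, fy=1.0, cx=0.5, cy=0.5)

# Hypothetical camera pose: no rotation, 1 m offset along world z.
points_W = transform_points(np.eye(3), np.array([0.0, 0.0, 1.0]), points_C)
```

With several cameras, applying each camera's own pose in this way puts all of the clouds in one common world frame, where they can simply be concatenated.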

two on wrist

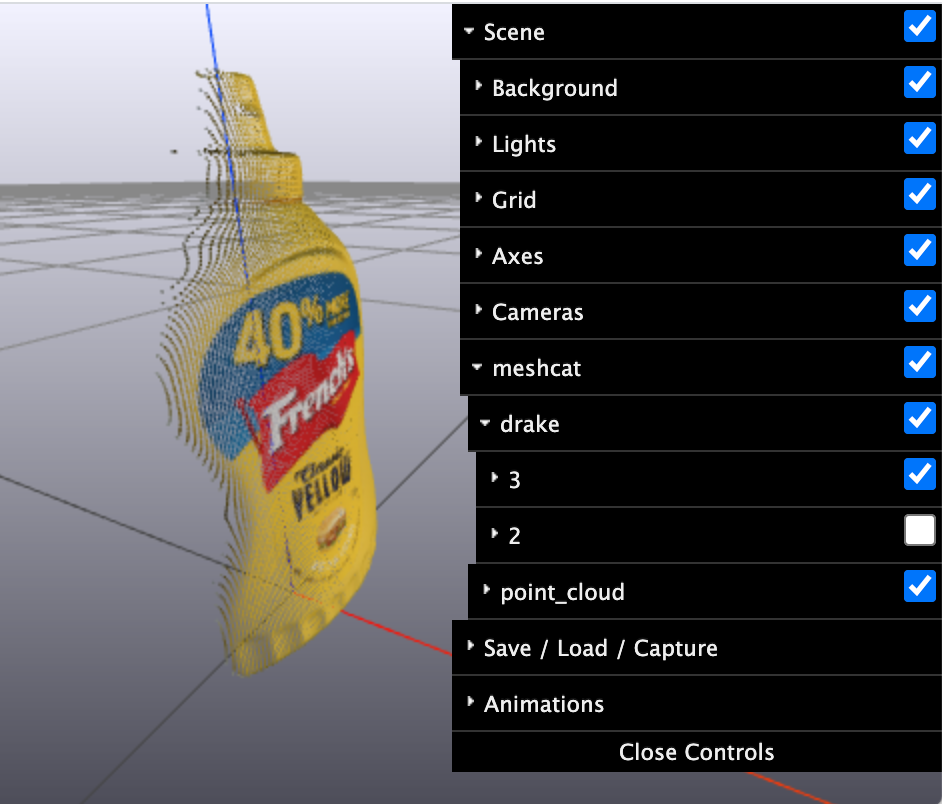

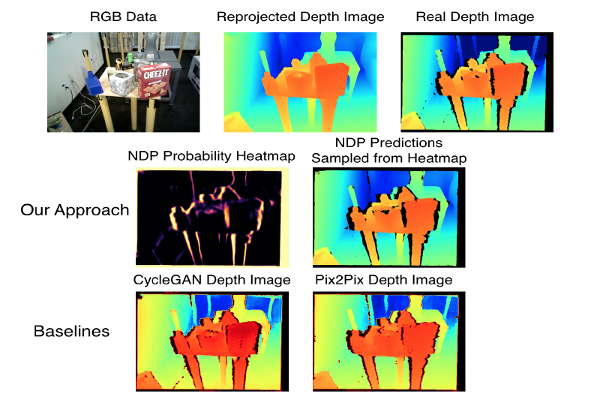

Real point clouds are also messy

Figure from Chris Sweeney et al., ICRA 2019.
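One common first step for messy clouds, sketched here under stated assumptions: drop points with missing (NaN or infinite) coordinates, then crop to a box around the workspace. The function name `clean_point_cloud` and the box limits are hypothetical, and this is only one of many possible preprocessing choices.

```python
import numpy as np


def clean_point_cloud(points, lower, upper):
    """Remove non-finite points, then keep only points inside an axis-aligned box.

    points: (N, 3) array; lower and upper: length-3 box corners in the same frame.
    """
    points = np.asarray(points, dtype=float)
    # Filter missing returns first so the comparisons below see only real numbers.
    points = points[np.all(np.isfinite(points), axis=1)]
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    in_box = np.all((points >= lower) & (points <= upper), axis=1)
    return points[in_box]


# Made-up cloud: one good point, one dropout, one far-away outlier.
raw = np.array([
    [0.1, 0.0, 0.5],
    [np.nan, 0.0, 0.0],
    [5.0, 0.0, 0.0],
])
cleaned = clean_point_cloud(raw, lower=[-1.0, -1.0, 0.0], upper=[1.0, 1.0, 1.0])
```

Cropping removes far-away clutter but does nothing about noise on the object itself, so it is a starting point rather than a complete fix.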

to the board...