UI Testing

Best Practices

Stefano Magni (@NoriSte)

I'm Stefano Magni (@NoriSte)

I work for Conio, a bitcoin startup based in Milan.

You can find me on Twitter, GitHub, LinkedIn or StackOverflow.

I'm on the Milano Frontend channel on Slack too.

Slides info

Words with different colors are links 🔗

best practices are highlighted

Don't take notes or photos... These slides are publicly available and I'll publish them on Meetup right after the talk 🎉

https://slides.com/noriste/milano-frontend-ui-testing-best-practices#/

or

https://bit.ly/2UttyKE

I tried to keep the important stuff at the top of the slides because... the bottom part isn't visible from the rear 😅

Some examples are based on Cypress (and the Testing Library plugin) but this talk won't be about Cypress itself.

A few concepts come from React, but don't worry if you don't know it well.

Disclaimer

The best practices come from:

- my own experience in Conio

- my previous (bad) experience with E2E testing

- a lot of resources and courses I studied to improve my testing skills

What we test with UI testing:

- 👁 visual regressions

- ⌨️ interaction flows

- 🔁 client/server communication

First of all, some testing rules:

🏎 Tests must be as fast as possible: they ideally should be run on every CTRL+S.

It's all about our (and deploy) time. Slow tests are more likely to be discarded.

🚜 Tests must be reliable: we all hate false negatives and double checks.

Unreliable tests are the worst ones.

Sooner or later we will discard them if they aren't reliable.

🚲 Tests must be resilient: they must require the least amount of maintenance.

Tests are useful to avoid the cognitive load of remembering what we did months ago. They must be a useful tool, not another tool to be maintained.

⛑ Tests must be meticulous: the more a test asserts, the less time we spend finding what went wrong.

... Without exaggerating...

Well... Avoiding test overkill is hard to learn, that's why I want to share my experience with you 😊

Well, a lot of times UI tests are:

🏎 slow

🚜 unreliable

🚲 to be maintained frequently

⛑ not balanced in terms of assertions

But we could improve them! Let's see a list of

Best practices

Test types

We split UI testing into three kinds of tests:

- 💅 Component tests

- 🚀 UI integration tests (front-end tests)

- 🌎 E2E tests (~ back-end tests)

💅 Component tests +

🚀 UI integration tests +

🌎 E2E tests =

🎉🎉🎉 High confidence

Standard testing classification:

Unit testing: ⚛️

Integration testing: ⚛️ ⚛️ ⚛️ ⚛️ ⚛️ ⚛️

UI testing classification:

Component testing: ⚛️

UI integration testing: ⚛️ ⚛️ ⚛️ ⚛️ ⚛️ ⚛️

💅 With component tests we test a single component aspect/contract:

- its generated markup (snapshot testing)

- its visual aspect (regression testing)

- its external callback contracts

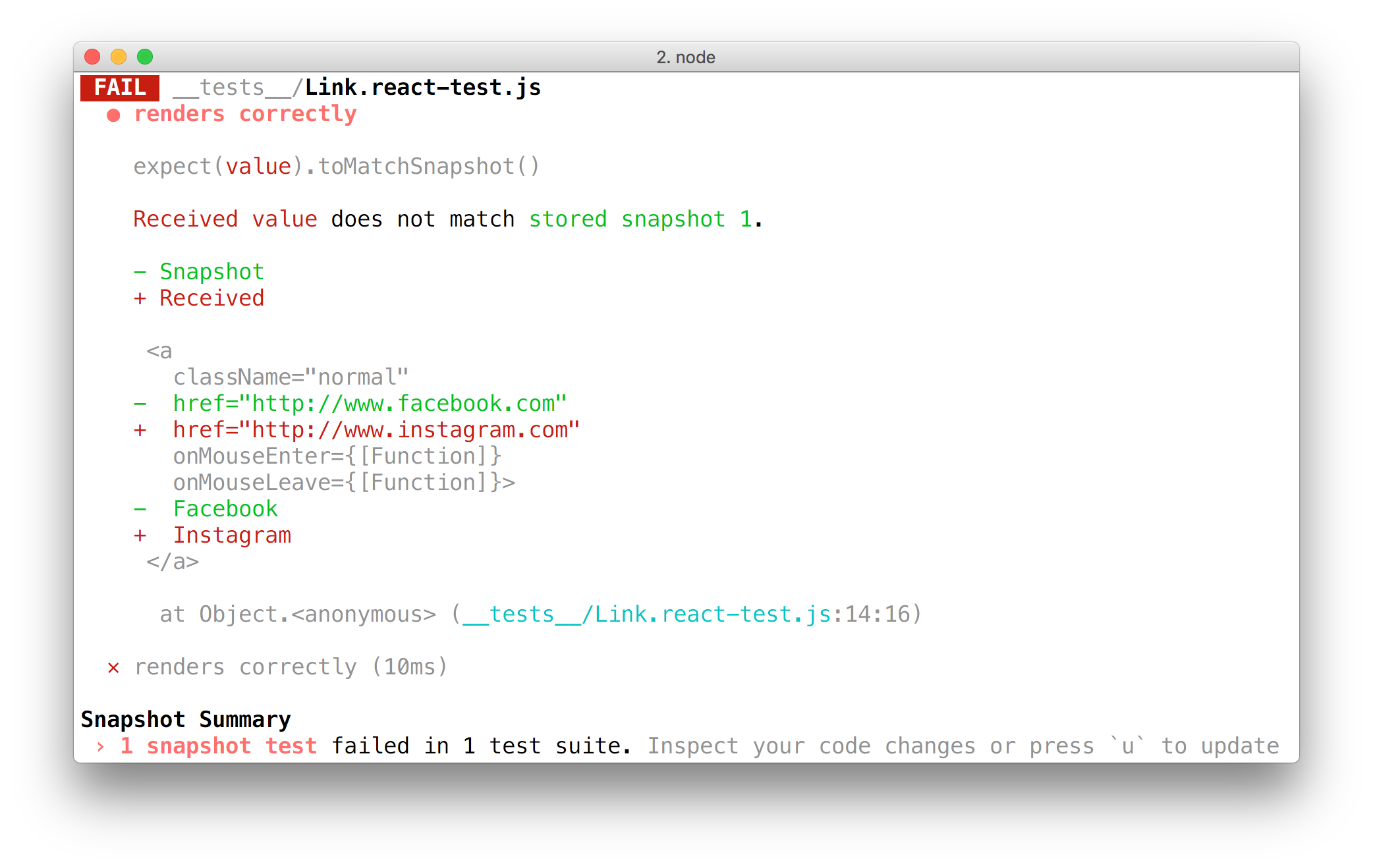

Snapshot testing means checking the consistency of the generated markup.

A test like the following one

    import React from 'react';
    import Link from '../Link.react';
    import renderer from 'react-test-renderer';

    it('renders correctly', () => {
      const tree = renderer
        .create(<Link page="http://www.facebook.com">Facebook</Link>)
        .toJSON();
      expect(tree).toMatchSnapshot();
    });

Produces a snapshot file like this

    exports[`renders correctly 1`] = `
    <a
      className="normal"
      href="http://www.facebook.com"
      onMouseEnter={[Function]}
      onMouseLeave={[Function]}
    >
      Facebook
    </a>
    `;

And a change in the test

    // Updated test case with a Link to a different address
    it('renders correctly', () => {
      const tree = renderer
        .create(<Link page="http://www.instagram.com">Instagram</Link>)
        .toJSON();
      expect(tree).toMatchSnapshot();
    });

Makes the test fail

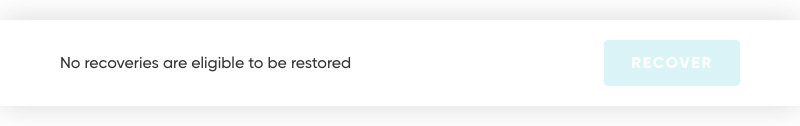
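The mechanism behind a snapshot test can be sketched without Jest or React. This is only an illustration of the idea, not how Jest implements it: `serialize`, `matchSnapshot` and the in-memory `snapshots` map are hypothetical names standing in for the real renderer and the snapshot file.

```javascript
// Hypothetical helpers: they illustrate the idea, they are not Jest's implementation.

// Turn a tiny element description into a stable, readable string.
function serialize(element) {
  const props = Object.keys(element.props)
    .sort()
    .map((name) => `  ${name}="${element.props[name]}"`)
    .join("\n");
  return `<${element.type}\n${props}\n>\n  ${element.children}\n</${element.type}>`;
}

// In-memory stand-in for the snapshot file stored next to the test.
const snapshots = new Map();

// First run: store the markup. Following runs: compare with the stored markup.
function matchSnapshot(name, element) {
  const current = serialize(element);
  if (!snapshots.has(name)) {
    snapshots.set(name, current);
    return { pass: true, written: true };
  }
  return { pass: snapshots.get(name) === current, written: false };
}

const facebookLink = {
  type: "a",
  props: { className: "normal", href: "http://www.facebook.com" },
  children: "Facebook"
};
const instagramLink = {
  type: "a",
  props: { className: "normal", href: "http://www.instagram.com" },
  children: "Instagram"
};

const firstRun = matchSnapshot("renders correctly", facebookLink); // writes the snapshot
const sameMarkup = matchSnapshot("renders correctly", facebookLink); // passes
const changedMarkup = matchSnapshot("renders correctly", instagramLink); // fails

console.log(firstRun, sameMarkup, changedMarkup);
```

The third call fails because the stored markup still points to the old address, which is exactly the kind of change a snapshot test is meant to surface.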

Regression testing means checking the consistency of the visual aspect of the component.

Take a look at this component

If we change its CSS

A regression test prompts us with the differences

Callback testing means checking the component respects the callback contract.

it('Should invoke the passed callback when the user clicks on it', () => {

const user = {

email: "user@conio.com",

user_id: "534583e9-9fe3-4662-9b71-ad1e7ef8e8ce"

};

const mock = jest.fn();

const { getByText } = render(

<UserListItem clickHandler={mock} user={user} />

);

fireEvent.click(getByText(user.user_id));

expect(mock).toHaveBeenCalledWith(user.user_id);

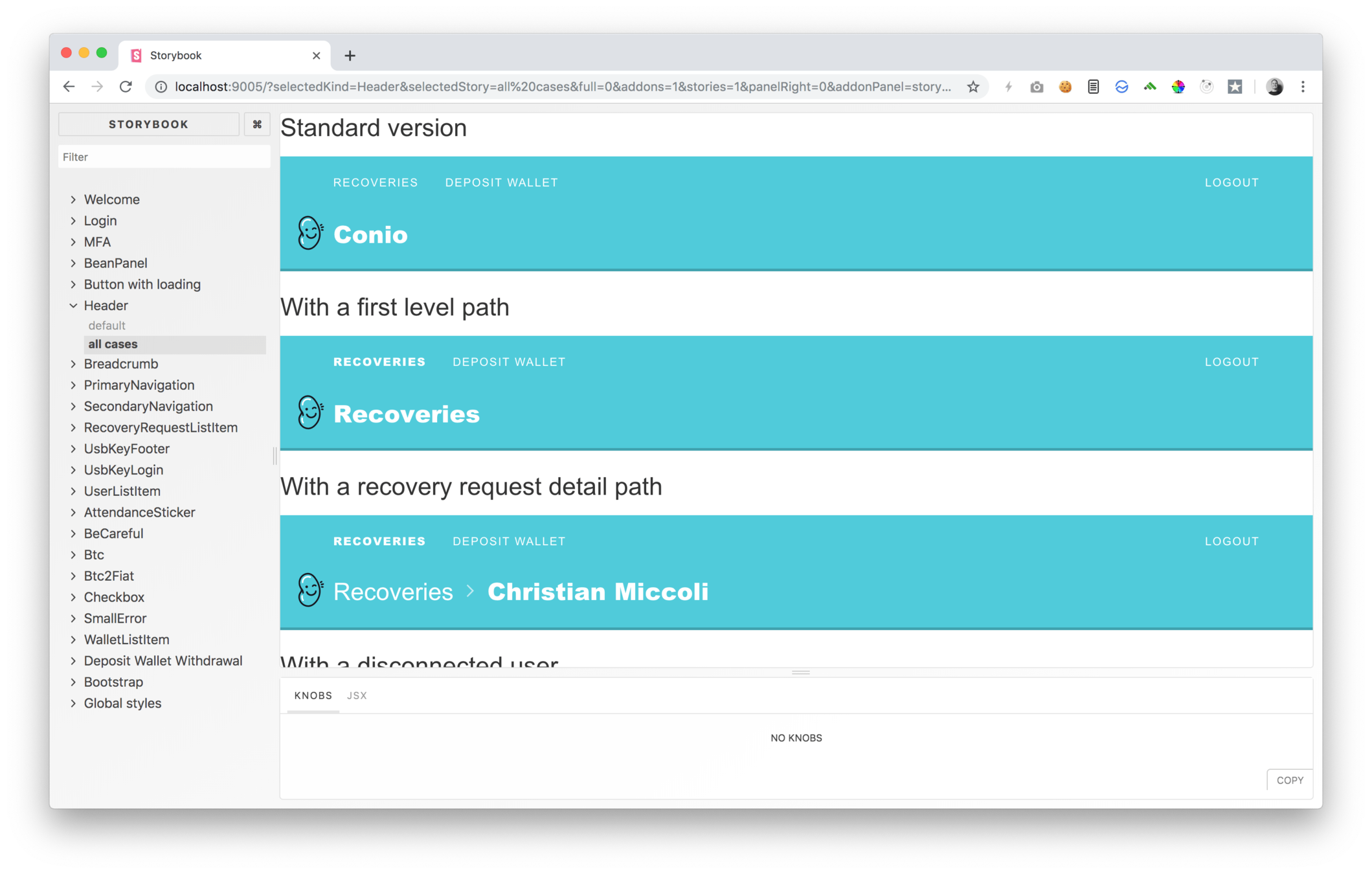

});💅 Component tests are made easy by dedicated tools like:

- Storybook (and its Storyshots add-on)

- React Testing Library (or Enzyme etc.)
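What a callback test verifies can be shown without any of these tools. The sketch below is an assumption-laden stand-in: `createMock` mimics only the call-recording part of `jest.fn()`, and `createUserListItem` models only the callback contract of the component, not its rendering.

```javascript
// Hypothetical stand-in for jest.fn(): it records the arguments of every call.
function createMock() {
  const calls = [];
  const mock = (...args) => {
    calls.push(args);
  };
  mock.calls = calls;
  return mock;
}

// Hypothetical stand-in for the component: only the callback contract is modeled.
function createUserListItem({ user, clickHandler }) {
  return {
    label: user.user_id,
    click: () => clickHandler(user.user_id)
  };
}

const user = {
  email: "user@conio.com",
  user_id: "534583e9-9fe3-4662-9b71-ad1e7ef8e8ce"
};
const clickHandler = createMock();
const item = createUserListItem({ user, clickHandler });

// Simulate the user click, then inspect what the callback received.
item.click();
console.log(clickHandler.calls);
```

The contract is respected when the callback has been called exactly once, with the user id as its only argument.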

Automate component tests

✅ performance

⬜️ reliability

✅ maintainability

✅ assertions quality

Otherwise: the more time we spend writing them, the higher the chance that we won't write them 😉

Once we have Storybook'ed our components...

... We can automate the snapshot tests...

... With just a line of code!

    import initStoryshots from "@storybook/addon-storyshots";

    initStoryshots({
      storyNameRegex: /^((?!playground|demo|loading|welcome).)*$/
    });

* I used the regex to filter out useless component stories, like the ones where there is an animated loading
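To see what that regular expression keeps and what it filters out, it can be tried against a few story names. The names below are made up for the example, they don't come from a real project.

```javascript
// The regex from the slide: it matches only the names that contain
// none of the listed words (negative lookahead at every position).
const storyNameRegex = /^((?!playground|demo|loading|welcome).)*$/;

// Hypothetical story names.
const storyNames = [
  "default",
  "with long label",
  "loading state",
  "playground",
  "disabled",
  "Loading"
];

const testedStories = storyNames.filter((name) => storyNameRegex.test(name));

console.log(testedStories);
// → [ 'default', 'with long label', 'disabled', 'Loading' ]
```

Note that the match is case-sensitive: a story called "Loading" is not filtered out unless the `i` flag is added to the regex.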

Regression tests: it's the same 😊

    import initStoryshots from "@storybook/addon-storyshots";
    import { imageSnapshot } from "@storybook/addon-storyshots-puppeteer";

    // regression tests (not run in CI)
    // they don't work if you haven't launched storybook
    if (!process.env.CI) {
      initStoryshots({
        storyNameRegex: /^((?!playground|demo|loading|welcome).)*$/,
        suite: "Image storyshots",
        test: imageSnapshot({ storybookUrl: "http://localhost:9005/" })
      });
    } else {
      test("The regression tests won't run", () => expect(true).toBe(true));
    }

If you don't use a style-guide tool like Storybook, at least automate the component and test creation with templates.

- Blueprint for VSCode

- React templates

- etc.

🚀 With UI integration tests: we consume the real UI in a real browser with a fake server.

They are super fast because the server is stubbed/mocked: we don't face network latency or server slowness/unreliability.

I call them UI integration tests to differentiate them from the "classic" integration ones.

🚀 With UI integration tests we test the front-end.

Write a lot of UI Integration tests

✅ performance

✅ reliability

✅ maintainability

⬜️ assertions quality

Otherwise: we write a lot of E2E tests but remember that they are not practical at all 😠

🚀 UI integration tests are really fast

🚀 UI integration tests are our ace in the hole.

We can test every UI flow/state with confidence.

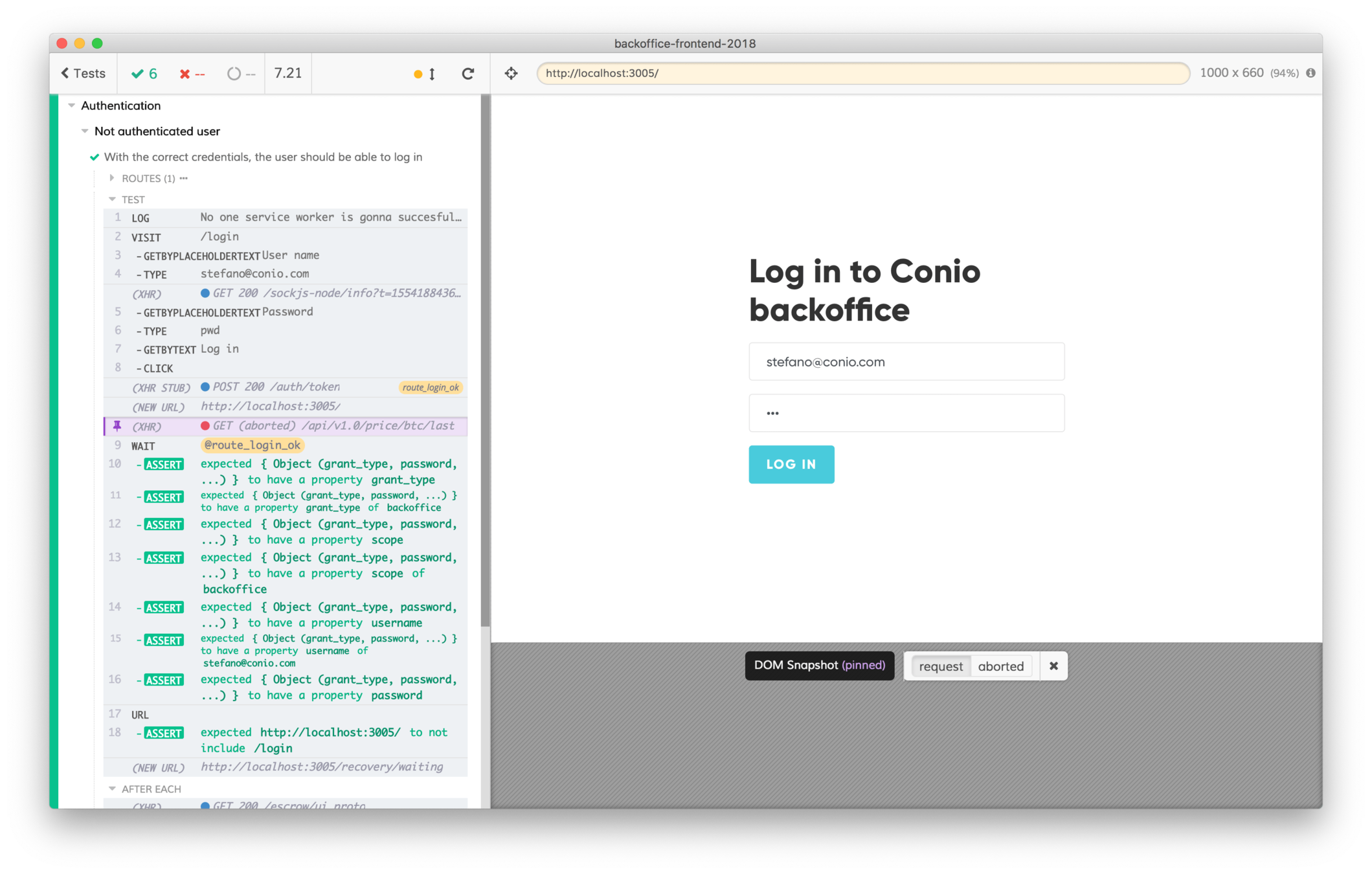

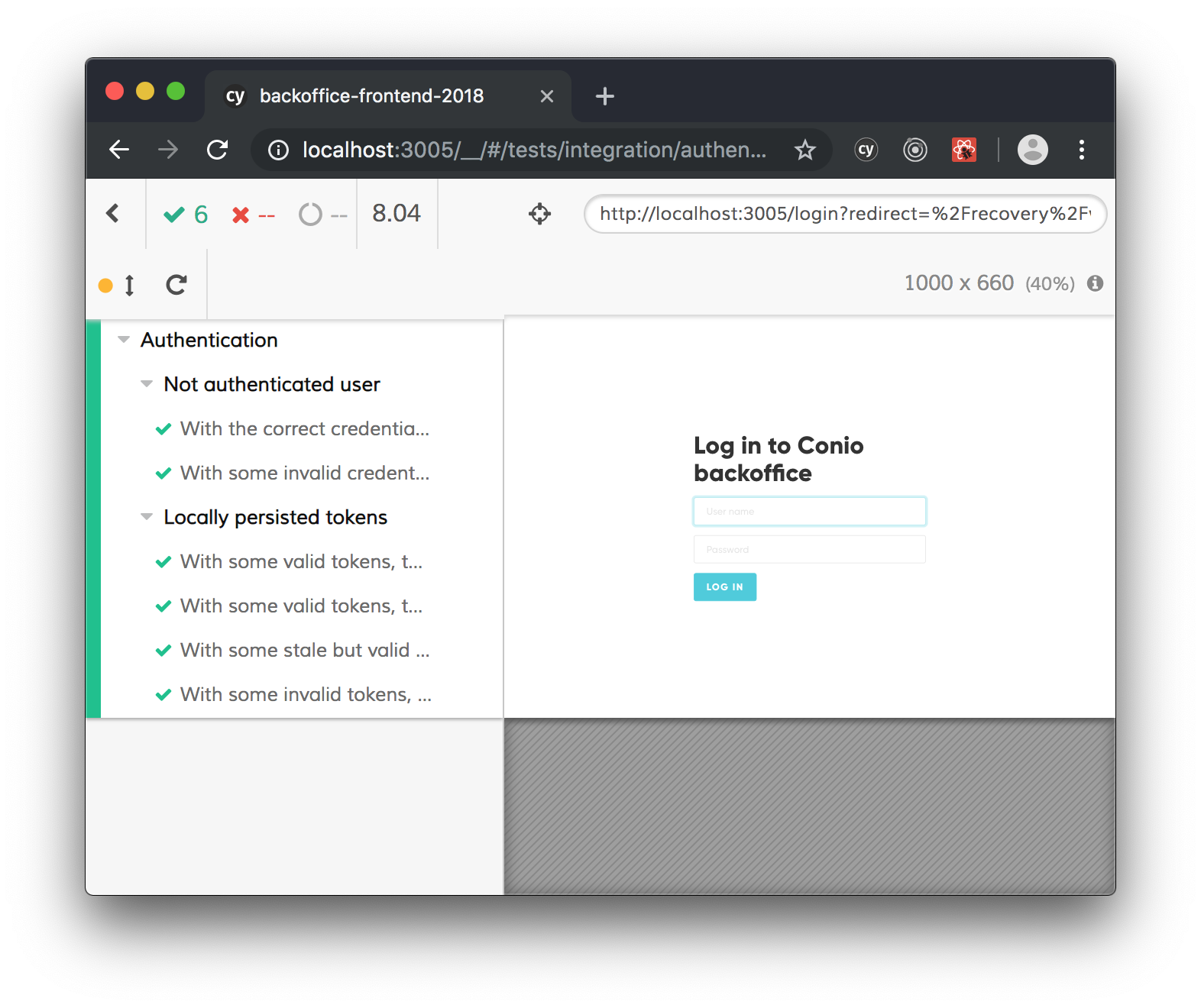

A Cypress screenshot

🌎 E2E tests: we consume the real UI in a real browser with the real server and data.

They are slow, also because we need to clear the data before and after the tests.

🌎 With E2E tests we test the back-end.

Write a few E2E tests (just for the happy paths*)

✅ performance

✅ reliability

⬜️ maintainability

⬜️ assertions quality

Otherwise: our tests will be 10x slower and we make our back-enders and DevOps accountable for our own tests.

* A happy path is a default/expected scenario featuring no errors.

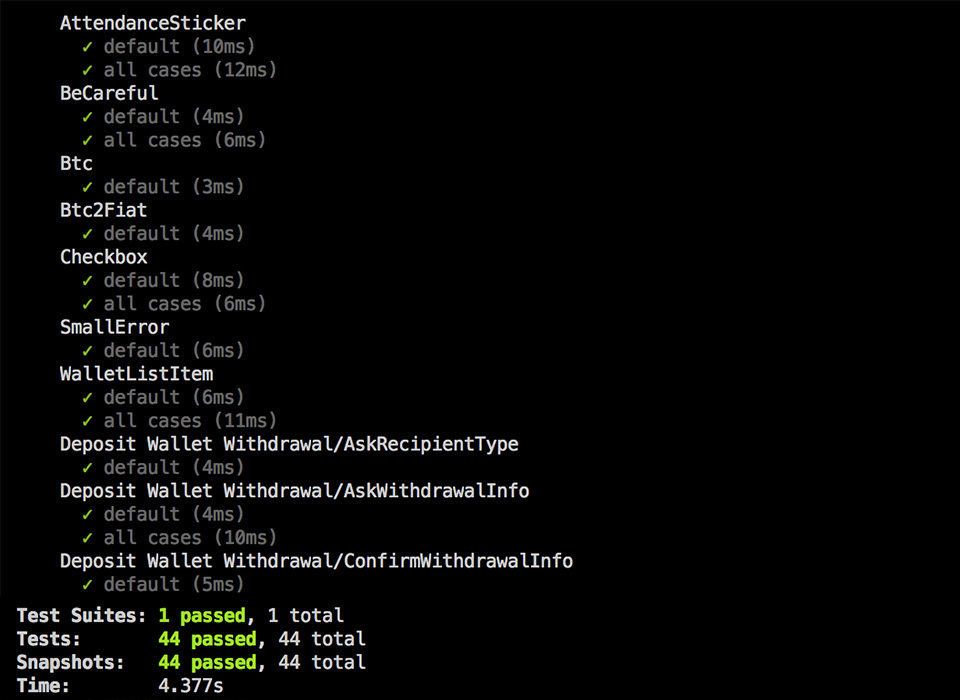

The project I'm currently working on takes:

- 4" for 18 💅 callback tests

- 5" for 44 💅 snapshot tests

- 46" for 44 💅 regression tests

- 82" for 48 🚀 UI integration tests

- 183" for 11 🌎 E2E tests
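The same numbers read better as seconds per test. A quick calculation, assuming the `"` on the slide means seconds:

```javascript
// Figures from the slide (assumption: the " symbol stands for seconds).
const suites = [
  { name: "callback tests", seconds: 4, tests: 18 },
  { name: "snapshot tests", seconds: 5, tests: 44 },
  { name: "regression tests", seconds: 46, tests: 44 },
  { name: "UI integration tests", seconds: 82, tests: 48 },
  { name: "E2E tests", seconds: 183, tests: 11 }
];

// Average duration of a single test, rounded to two decimals.
const averages = suites.map((suite) => ({
  name: suite.name,
  secondsPerTest: Number((suite.seconds / suite.tests).toFixed(2))
}));

// How many times slower a single E2E test is compared to a UI integration one.
const e2eVsIntegration = Math.round((183 / 11) / (82 / 48));

console.log(averages);
// callback 0.22, snapshot 0.11, regression 1.05, UI integration 1.71, E2E 16.64
console.log(e2eVsIntegration);
// → 10
```

On these figures a single E2E test costs roughly ten times a UI integration test, in line with the "10x slower" warning above.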

A simple example: authentication.

The steps usually are the following:

Go to the login page

Write username and password

Click "Login"

Redirect to the home page

Show a success feedback

A first (to be improved) test could be:

    // Cypress example
    cy.visit("/login");
    cy.get(".username").type(user.email);
    cy.get(".password").type(user.password);
    cy.get(".btn.btn-primary").click();
    cy.wait(3000); // no need for that, I added it on purpose
    cy.getByText("Welcome back").should("exist");
    cy.url().should("include", "/home");

Base tests on contents, not on selectors

⬜️ performance

⬜️ reliability

✅ maintainability

✅ assertions quality

Otherwise: we need to inspect the DOM frequently in case of failures (adding unique and self-explanatory IDs isn't an easy task compared with looking for content in a screenshot).

👨‍💻👩‍💻 The more we consume our front-end as the users do, the more effective our tests are.

    // Cypress example: based on selectors
    cy.get(".username").type(user.email);
    cy.get(".password").type(user.password);
    cy.get(".btn.btn-primary").click();

    // Cypress example: based on contents
    cy.getByPlaceholderText("Username").type(user.email);
    cy.getByPlaceholderText("Password").type(user.password);
    cy.getByText("Login").click();

As a second choice, use data-testid attributes

⬜️ performance

✅ reliability

✅ maintainability

⬜️ assertions quality

Otherwise: we risk basing our tests on attributes with a different purpose, like classes or ids.

🛠 A dedicated attribute is more resilient to refactoring...

    // Cypress example
    // The login button now has an emoticon
    // cy.getByText("Login").click();
    cy.getByTestId("login-button").click();

    <button data-testid="login-button">✅</button>

🤦♂ ... Compared to other selectors...

    <button
      class="btn btn-primary btn-sm"
      id="ok-button">
      ✅
    </button>

BTW, the test passes, everything is ok!

Go to the login page

Write username and password

Click "Login"

Redirect to the home page

Show a success feedback

But, after some changes/time, it fails...

What went wrong?

😇 The front-end faults could be:

- the login button isn't dispatching the right action

- the credentials aren't passed in the action payload

- the request payload is wrong

🤬 The back-end faults could be:

- the accepted payload changed

- the response payload is wrong

- the server is down

😖 Other faults could be:

- the network is choked

- the server is busy

🤔 There's room for too many errors. Could the test be more self-explanatory?

Let's refactor it!

Await, don't sleep

✅ performance

✅ reliability

⬜️ maintainability

⬜️ assertions quality

Otherwise: we make our tests slower and slower.

It always starts with...

"Sleeping for 3 seconds is enough for an AJAX call!" 👍

⌛️ Then, at the first Wi-Fi slowness...

"I'll sleep the test for 10 seconds..." 😎

⌛️ Then, at the first server wakeup...

"... 30 seconds?" 😓

⌛️ ...

⌛️ ...

⌛️ ...

Well done, we have a test that sleeps for 30 seconds!

Even if our AJAX request usually lasts less than a second...

📡👀 Instead, wait for network responses

    // Cypress example
    cy.server(); // cy.route() needs a started server
    cy.route({
      method: "POST",
      url: "/auth/token"
    }).as("route_login");

    cy.getByPlaceholderText("Username").type(user.email);
    cy.getByPlaceholderText("Password").type(user.password);
    cy.getByText("Login").click();

    // it doesn't matter how long it takes
    cy.wait("@route_login");

    cy.getByText("Welcome back").should("exist");
    cy.url().should("include", "/home");

👀 Everything can be awaited

🗒👀 Wait for contents

    // Cypress example
    // ... same login steps and network wait as in the previous example ...

    // the assertion is retried until the content appears (or the timeout expires)
    cy.getByText("Welcome back").should("exist");
    cy.url().should("include", "/home");

⚛️👀 Wait for specific client state

We (Tommaso and I) have written a dedicated plugin! 🎉 Please thank the Open Source Saturday community for that, we developed it three days ago 😊

    cy.waitUntil(() => cy.window().then(win => win.foo === "bar"));

🚜 Waiting for external events/data makes our tests more robust and gives us better hints in case of failures.

🎉 Dedicated UI testing tools embed dynamic waiting.

For example, Cypress waits (by default, we can adjust it) up to:

- 60 seconds for a page load

- 5 seconds for an XHR request start

- 30 seconds for an XHR request end

- 4 seconds for an element to appear
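The idea behind awaiting instead of sleeping can be sketched in a few lines of plain JavaScript. This `waitUntil` is a hypothetical helper written for the example: it is neither the Cypress retry mechanism nor the plugin mentioned above, and the `timeout`/`interval` option names are assumptions.

```javascript
// Hypothetical helper: polls a condition until it is truthy or the timeout expires.
function waitUntil(condition, { timeout = 5000, interval = 50 } = {}) {
  const startedAt = Date.now();
  return new Promise((resolve, reject) => {
    const check = () => {
      let result;
      try {
        result = condition();
      } catch (error) {
        reject(error);
        return;
      }
      if (result) {
        // Resolve as soon as the condition is met: no time is wasted.
        resolve(result);
      } else if (Date.now() - startedAt >= timeout) {
        reject(new Error(`Condition not met within ${timeout}ms`));
      } else {
        setTimeout(check, interval);
      }
    };
    check();
  });
}

// Simulated client state that becomes ready after an unpredictable delay.
const fakeWindow = { foo: undefined };
setTimeout(() => {
  fakeWindow.foo = "bar";
}, 120);

const waited = waitUntil(() => fakeWindow.foo === "bar", {
  timeout: 2000,
  interval: 20
});

waited.then(() => console.log("state is ready, the test can go on"));
```

The timeout is only an upper bound: the test pays the real waiting time, not the worst case, and when the bound is exceeded the error says what was being waited for.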

😅 Ok, it was just a network issue, now the test is green

Go to the login page

Write username and password

Click "Login"

Wait for the network request

Redirect to the home page

Show a success feedback

Ten releases later... The test fails...

Here we are again... But, wait, the test could tell us something more!

Test AJAX request and response payloads

✅ performance

✅ reliability

⬜️ maintainability

✅ assertions quality

Otherwise: we waste our time debugging our test/UI just to realize that the server payloads have changed.

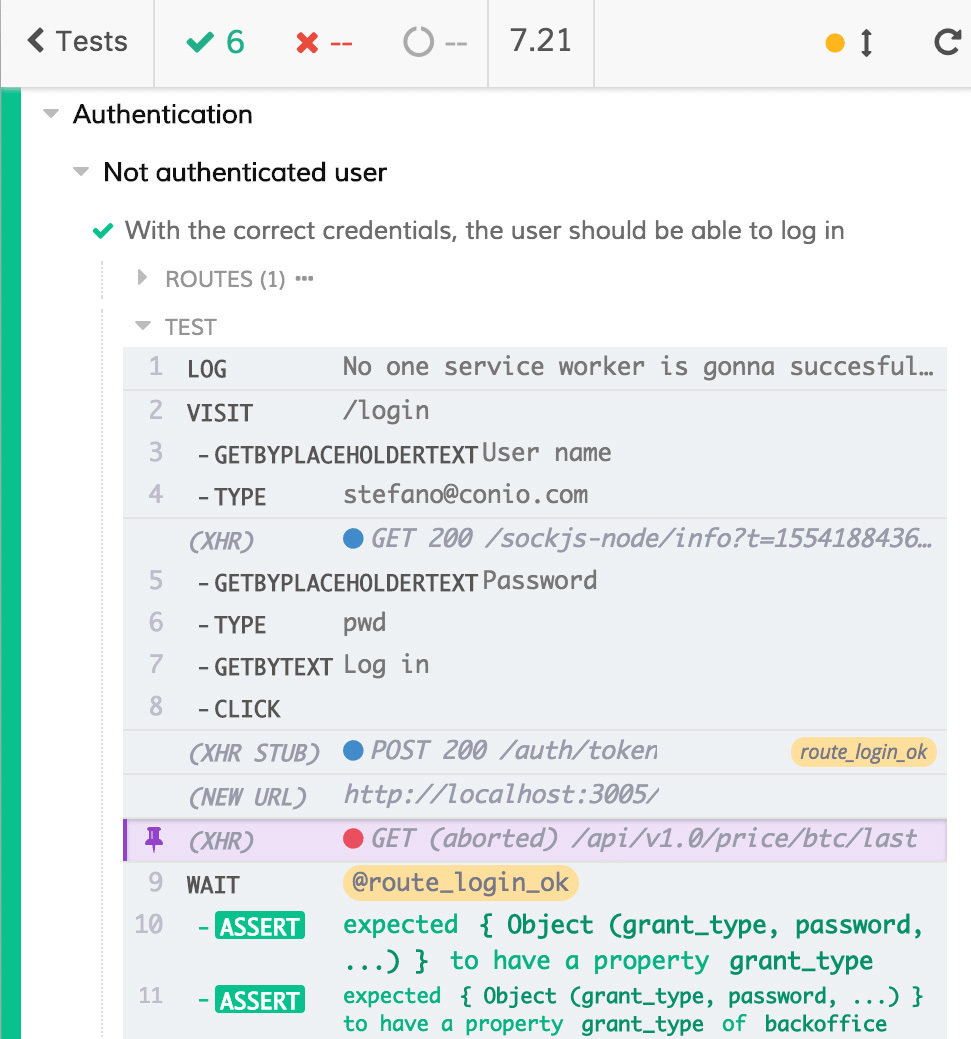

📡 ➡ 📦 Use UI integration tests to check the request payload

    cy.wait("@route_login").then(xhr => {
      const params = xhr.request.body;
      expect(params).to.have.property("grant_type", AUTH_GRANT);
      expect(params).to.have.property("scope", AUTH_SCOPE);
      expect(params).to.have.property("username", user.email);
      expect(params).to.have.property("password", user.password);
    });

📡 ⬅ 📦 Use E2E tests to check the response payload

    cy.wait("@route_login").then(xhr => {
      expect(xhr.status).to.equal(200);
      expect(xhr.response.body).to.have.property("access_token");
      expect(xhr.response.body).to.have.property("refresh_token");
    });

Assert frequently

⬜️ performance

⬜️ reliability

✅ maintainability

✅ assertions quality

Otherwise: we spend more time than needed to find why a test failed.

We aren't unit-testing, a user flow has lot of steps.

cy.getByPlaceholderText("Username").type(user.email);

cy.getByPlaceholderText("Password").type(user.password);

cy.getByText("Login").click();

cy.wait("@route_login").then(xhr => {

const params = xhr.request.body;

expect(params).to.have.property("grant_type", AUTH_GRANT);

expect(params).to.have.property("scope", AUTH_SCOPE);

expect(params).to.have.property("username", user.email);

expect(params).to.have.property("password", user.password);

});

cy.getByText("Welcome back").should("exist");

cy.url().should("be", "/home");⬜️ performance

⬜️ reliability

✅ maintainability

✅ assertions quality

Otherwise: we waste time relaunching the test to discover what's wrong with some data.

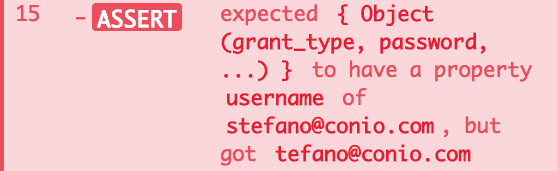

Use the right assertion

An example

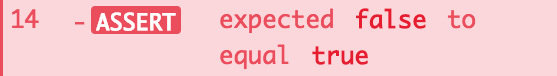

    // wrong
    expect(xhr.request.body.username === user.email).to.equal(true);

    // right
    expect(xhr.request.body).to.have.property("username", user.email);

Failure feedback
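The difference in failure feedback can be reproduced outside Cypress. The sketch below uses Node's built-in `assert` module instead of the Chai assertions of the slides (an assumption made to keep it runnable), and the wrong username in `requestBody` is invented for the example.

```javascript
const nodeAssert = require("node:assert");

// Hypothetical data: the app sent the wrong username.
const user = { email: "user@conio.com" };
const requestBody = { username: "someone-else@conio.com" };

// Runs an assertion and returns its failure message, or null when it passes.
function failureMessage(assertion) {
  try {
    assertion();
    return null;
  } catch (error) {
    return error.message;
  }
}

// Wrong: the comparison happens before the assertion,
// so the assertion only knows about two booleans.
const vagueMessage = failureMessage(() =>
  nodeAssert.strictEqual(requestBody.username === user.email, true)
);

// Right: the assertion receives both values and can report them.
const helpfulMessage = failureMessage(() =>
  nodeAssert.strictEqual(requestBody.username, user.email)
);

console.log(vagueMessage);
console.log(helpfulMessage);
```

The first message only says that `false` is not `true`, while the second one contains both usernames, so there's no need to relaunch the test to discover what was sent.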

Nice, we'll be notified the next time someone changes the payload!

Go to the login page

Write username and password

Click "Login"

Wait for the network request

Check both request and response payloads

Redirect to the home page

Show a success feedback

What's next? How could we improve the test again?

Stay in context

⬜️ performance

✅ reliability

✅ maintainability

✅ assertions quality

Otherwise: we break the tests more often than needed.

We're testing that the user will be redirected away after a successful login.

    cy.getByText("Welcome back").should("exist");
    cy.url().should("include", "/home");

Changing the success redirect could make the test fail.

No more 😌

    cy.getByText("Welcome back").should("exist");
    cy.url().should("not.include", "/login");

Share constants and utilities with the app

⬜️ performance

✅ reliability

✅ maintainability

✅ assertions quality

Otherwise: we break the tests with minor changes.

What happens if the login route becomes "/authenticate"? 😖

    cy.getByText("Welcome back").should("exist");
    cy.url().should("not.include", "/login");

Now it isn't a problem anymore!

    import { LOGIN } from "@/navigation/routes";
    // ...
    cy.url().should("not.include", LOGIN);

Avoid testing implementation details

⬜️ performance

✅ reliability

✅ maintainability

✅ assertions quality

Otherwise: we are nagged by failing tests every time we make minor changes to our app.

He told me to assert frequently... I wanna check the Redux state too!

Go to the login page

Write username and password

Click "Login"

Wait for the network request

Check both request and response payloads

Check the Redux state

Redirect to the home page

Show a success feedback

Check the Redux state

Avoid it! The Redux state isn't relevant!

🧨 What happens if you refactor the state? The test fails even if the app works!

    // Cypress example
    cy.window().then((win: any) => {
      const appState = win.globalReactApp.store.getState();
      expect(appState.auth.username).to.equal(user.email);
    });

If the app is based on TypeScript, use it while testing too

⬜️ performance

✅ reliability

✅ maintainability

⬜️ assertions quality

Otherwise: we lose the type guard safety while writing tests.

Tests are, after all, just little scripts. Using TypeScript with them warns us promptly when the types change.

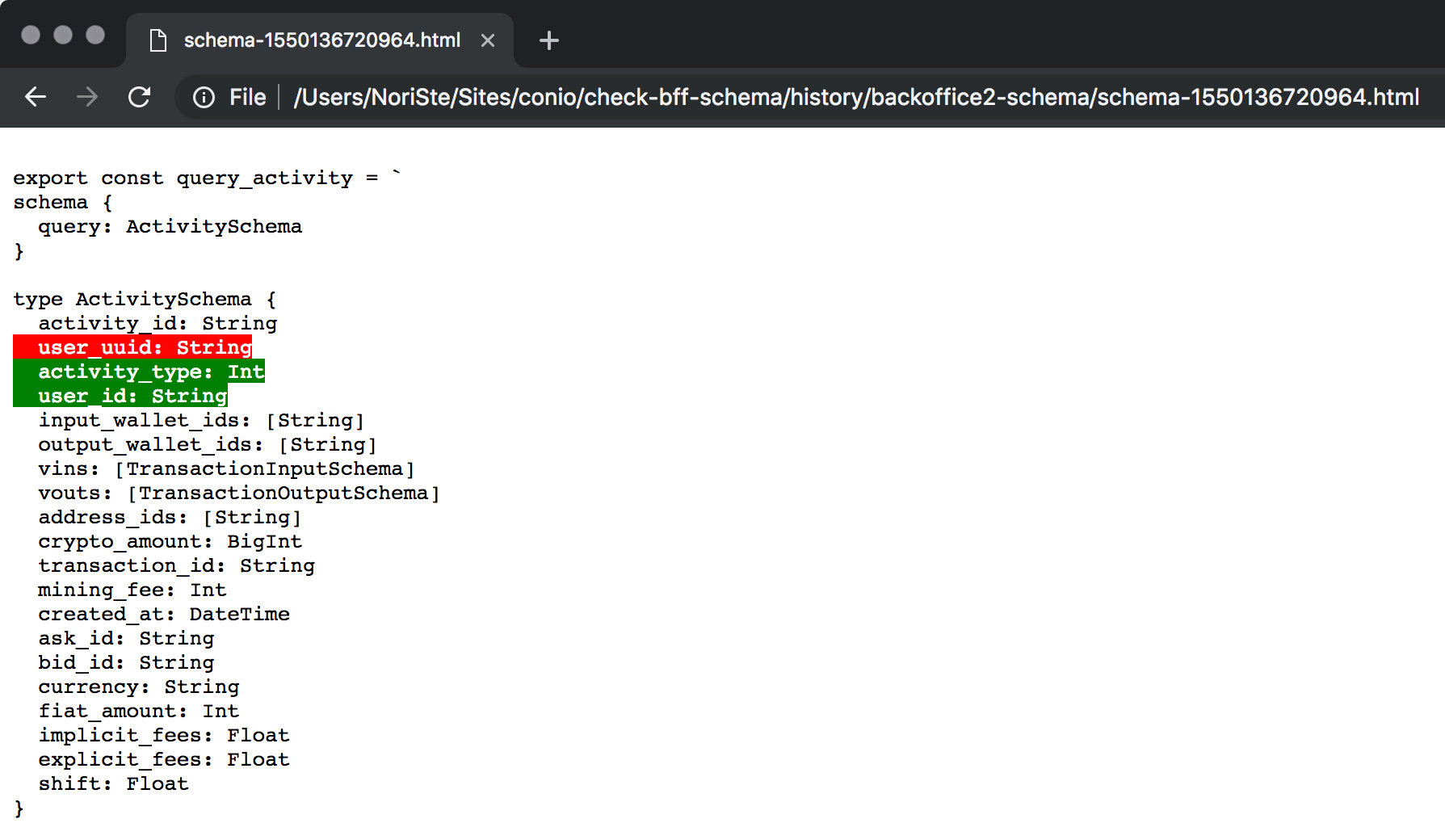

Look at every change of the server schema

⬜️ performance

✅ reliability

✅ maintainability

✅ assertion balance

Otherwise: we will debug someone else's faults or miss any important update that can affect our front-end.

To keep the schema checked you can use:

- Git

- Postman

I developed a small NPM package too, which highlights the changes in a text document.
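Whatever the tool, the goal is to spot added and removed fields as soon as they change. A minimal sketch of that kind of check follows: it is not the NPM package mentioned above, `listPaths` and `diffSchemas` are hypothetical helpers, and the two response shapes are invented.

```javascript
// Hypothetical helper: lists the dotted paths of every key of a JSON-like object.
function listPaths(value, prefix = "") {
  if (value === null || typeof value !== "object" || Array.isArray(value)) {
    return [];
  }
  return Object.keys(value).flatMap((key) => {
    const path = prefix ? `${prefix}.${key}` : key;
    return [path, ...listPaths(value[key], path)];
  });
}

// Hypothetical helper: reports which paths disappeared and which ones are new.
function diffSchemas(previous, current) {
  const before = new Set(listPaths(previous));
  const after = new Set(listPaths(current));
  return {
    removed: [...before].filter((path) => !after.has(path)),
    added: [...after].filter((path) => !before.has(path))
  };
}

// Invented example: two versions of a login response.
const previousResponse = {
  access_token: "...",
  refresh_token: "...",
  user: { email: "..." }
};
const currentResponse = {
  access_token: "...",
  user: { email: "...", id: "..." }
};

const schemaChanges = diffSchemas(previousResponse, currentResponse);

console.log(schemaChanges);
// → { removed: [ 'refresh_token' ], added: [ 'user.id' ] }
```

A removed field like `refresh_token` is the kind of change that would otherwise show up only as a failing front-end test.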

⬜️ performance

✅ reliability

⬜️ maintainability

⬜️ assertion balance

Otherwise: we block the back-enders from running them and check themselves they haven't broken anything.

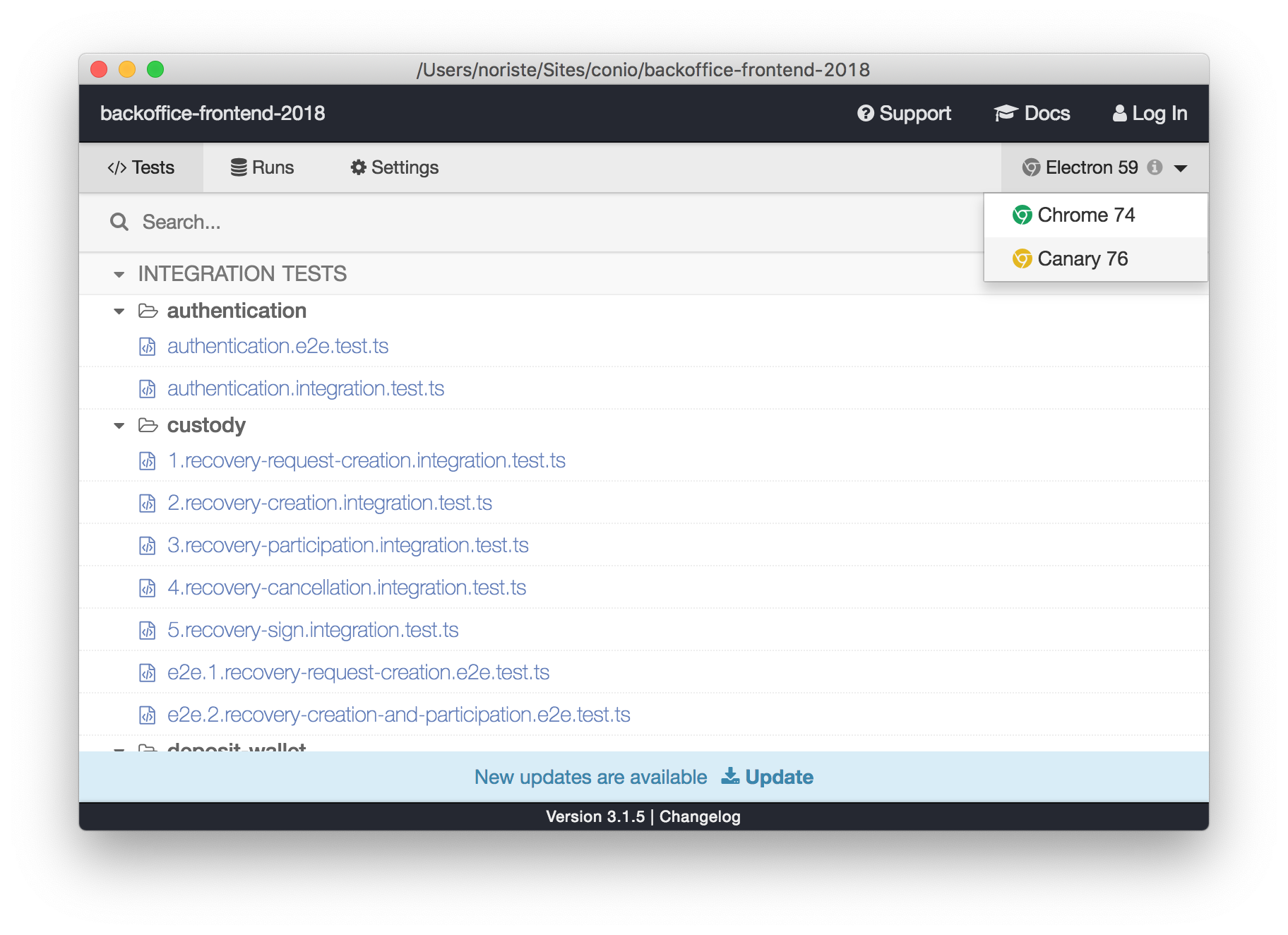

Allow to run the E2E tests only

Add a dedicated NPM script

    "scripts": {
      "test:e2eonly": "CYPRESS_TESTS=e2e npm-run-all build:staging test:cypress"
    }

Consider your testing tool a development tool

✅ performance

✅ reliability

⬜️ maintainability

⬜️ assertion balance

Otherwise: we won't leverage the power of an automated tool.

Usually, we

Add the username input field ⌨️

Try it in the browser 🕹

Add the password input field ⌨️

Try it in the browser 🕹

Add the submit button ⌨️ ➡️ 🕹

Add the XHR request ⌨️ ➡️ 🕹

Manage the error case ⌨️ ➡️ 🕹

Check we didn't break anything 🕹🕹🕹🕹

Fix it ⌨️ ⌨️ ⌨️ ➡️ 🕹🕹🕹🕹

Check again 🕹🕹🕹🕹

Fix it ⌨️ ➡️ 🕹🕹🕹🕹

Then, when everything works, we write the tests 😓

Fill the username input field 🤖

Fill the password input field 🤖

Click the submit button 🤖

Check the XHR request/response 🤖

Check the feedback 🤖

Re-do it for every error flow 🤖

Could this flow be improved? 🤔

Yep, we could:

Add the username input field ⌨️

Make Cypress fill the username input field 🤖

Add the password input field ⌨️

Make Cypress fill the password input field 🤖

Add the submit button ⌨️ ➡️ 🤖

Add the XHR request ⌨️ ➡️ 🤖

Manage the error case ⌨️ ➡️ 🤖

Check we didn't break anything

Fix it

But, mostly, we don't need to write the tests 🎉

How can we debug our app while we're developing and testing it alongside?

Cypress allows us to use a "dedicated" browser...

... Where we can install custom extensions too

Summing up

- tests mustn't fail for minor app updates

- if they fail, they must drive us directly to the issue

- the less time we spend writing tests, the more we love them

Recommended sources

- https://www.blazemeter.com/blog/top-15-ui-test-automation-best-practices-you-should-follow

- https://medium.freecodecamp.org/why-end-to-end-testing-is-important-for-your-team-cb7eb0ec1504

- https://blog.kentcdodds.com/write-tests-not-too-many-mostly-integration-5e8c7fff591c

- https://hackernoon.com/testing-your-frontend-code-part-iii-e2e-testing-e9261b56475

- https://gojko.net/2010/04/13/how-to-implement-ui-testing-without-shooting-yourself-in-the-foot-2/

- https://willowtreeapps.com/ideas/how-to-get-the-most-out-of-ui-testing

- http://www.softwaretestingmagazine.com/knowledge/graphical-user-interface-gui-testing-best-practices/

- https://www.slideshare.net/Codemotion/codemotion-webinar-protractor

- https://frontendmasters.com/courses/testing-javascript/introducing-end-to-end-testing/

- https://medium.com/welldone-software/an-overview-of-javascript-testing-in-2018-f68950900bc3

- https://medium.com/yld-engineering-blog/evaluating-cypress-and-testcafe-for-end-to-end-testing-fcd0303d2103

Extensive sources list

❤️ For the early feedbacks, thanks to

Thank you!

🙏 Please, give me any kind of feedback 😊