Node.js in production with resounding volume traffic

Manuel Kanah

Tech Lead

at Yumpingo Ltd

http://yumpingo.com/

e-mail: manuel@kanah.it

Twitter: @testinaweb

Github: testinaweb

Blog: http://www.labna.it

Node.js

Node.js is a JavaScript runtime built on Chrome's V8 JavaScript engine. Node.js uses an event-driven, non-blocking I/O model that makes it lightweight and efficient.

Node.js runs JavaScript on a single thread and uses non-blocking I/O calls, which lets it support tens of thousands of concurrent connections without incurring the cost of thread context switching. Sharing a single thread between all requests, using the observer pattern, is a design intended for building highly concurrent applications in which any function performing I/O must use a callback. To support the single-threaded event loop, Node.js uses the libuv library, which in turn uses a fixed-size thread pool that handles some of the non-blocking asynchronous I/O operations.

Single-threaded

A downside of this single-threaded approach is that Node.js doesn't allow vertical scaling by increasing the number of CPU cores of the machine it is running on without using an additional module, such as cluster, StrongLoop Process Manager or pm2. However, developers can increase the default number of threads in the libuv threadpool; these threads are likely to be distributed across multiple cores by the server operating system.
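As a rough illustration of what a module such as cluster does, the sketch below forks one worker per requested slot from the primary process and runs the application code in each worker. The helper name `startCluster` and its injectable `clusterImpl` parameter are hypothetical, introduced here only to keep the example small and testable; it is not how PM2 or any particular process manager is implemented.

```javascript
// Fork `workerCount` workers from the primary process; inside a worker,
// run the supplied function instead. `clusterImpl` defaults to Node's
// built-in cluster module and is injectable only for illustration.
function startCluster(workerCount, runWorker, clusterImpl = require('cluster')) {
  const isPrimary = clusterImpl.isPrimary !== undefined
    ? clusterImpl.isPrimary
    : clusterImpl.isMaster; // property name used by older Node.js releases

  if (!isPrimary) {
    runWorker(); // each worker runs its own event loop on its own thread
    return 0;
  }

  for (let i = 0; i < workerCount; i++) {
    clusterImpl.fork();
  }
  return workerCount;
}

// Typical use (left commented out so that loading this file forks nothing):
// startCluster(require('os').cpus().length, () => {
//   require('http').createServer((req, res) => res.end('ok')).listen(5001);
// });
```

Workers created this way can share a listening port, which is what lets a single endpoint use every core.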

DSP

A demand-side platform (DSP) is a system that allows buyers of digital advertising inventory to manage multiple ad exchange and data exchange accounts through one interface.

MongoDB data structure

> db.dsp.findOne({_id: "constrain"})

{

"_id": "constrain",

"c": [

"123",

"456",

"789"

]

}

The data structure lets the DSP work with arrays and obtain the matching campaigns from the intersection of those arrays. The campaigns that remain in the final array fill the response.
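The intersection step can be sketched as follows. This is a minimal illustration, not the production code: the constraint ids (`country:IT`, `device:mobile`, `category:food`) and the in-memory `constraints` map are made up for the example, and only the shape of each entry (an id mapped to an array of campaign ids) follows the document above.

```javascript
// Hypothetical in-memory copy of documents shaped like the one above:
// each constraint id maps to the campaign ids ("c") that satisfy it.
const constraints = new Map([
  ['country:IT', ['123', '456', '789']],
  ['device:mobile', ['456', '789', '999']],
  ['category:food', ['789', '456']],
]);

// Intersect any number of arrays, starting from the shortest one so the
// candidate list can only shrink.
function intersect(arrays) {
  if (arrays.length === 0) return [];
  const sorted = arrays.slice().sort((a, b) => a.length - b.length);
  let result = sorted[0].slice();
  for (let i = 1; i < sorted.length && result.length > 0; i++) {
    const lookup = new Set(sorted[i]);
    result = result.filter((id) => lookup.has(id));
  }
  return result;
}

// Campaigns that satisfy every constraint of a request.
function matchCampaigns(constraintIds) {
  const lists = constraintIds.map((id) => constraints.get(id) || []);
  return intersect(lists);
}

console.log(matchCampaigns(['country:IT', 'device:mobile', 'category:food']));
```

Starting from the shortest array and stopping as soon as the result is empty keeps the work proportional to the smallest constraint rather than the largest.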

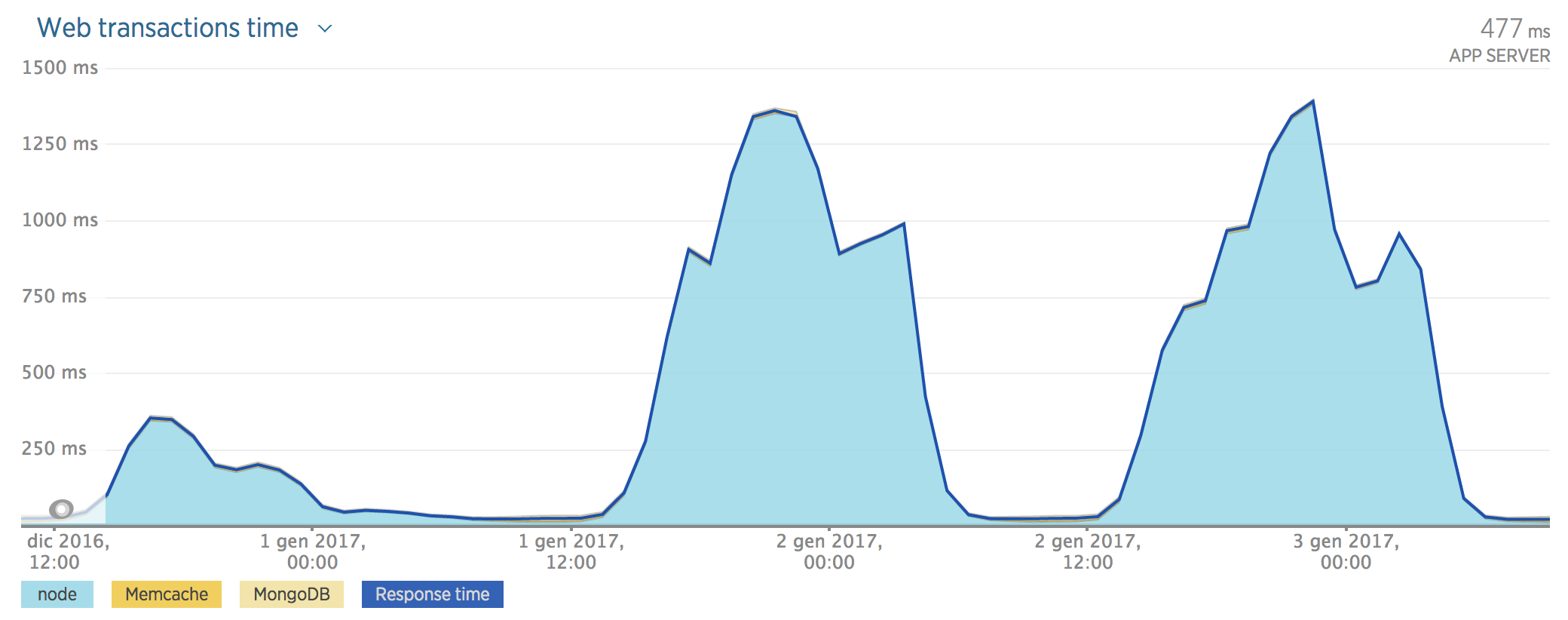

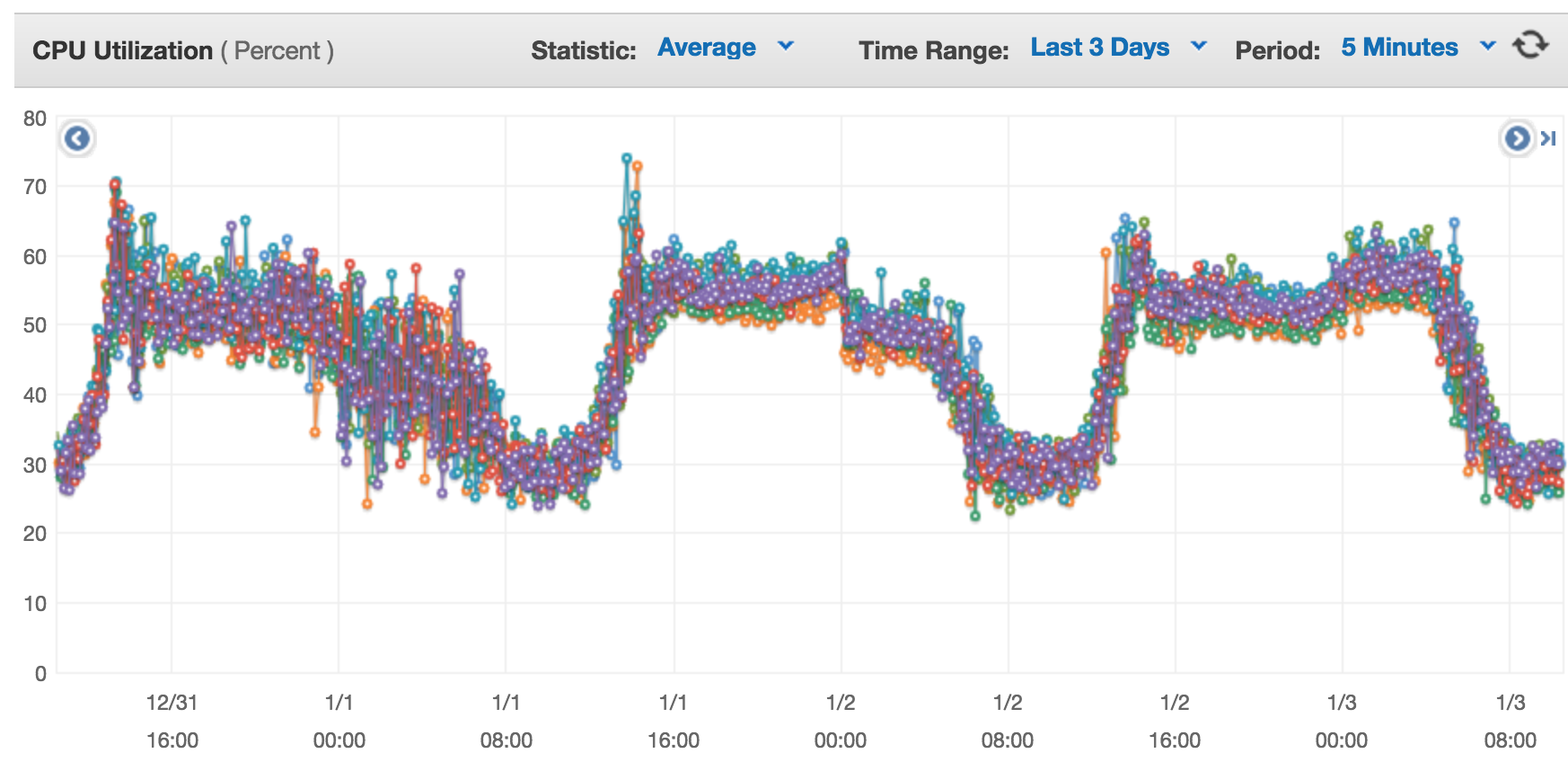

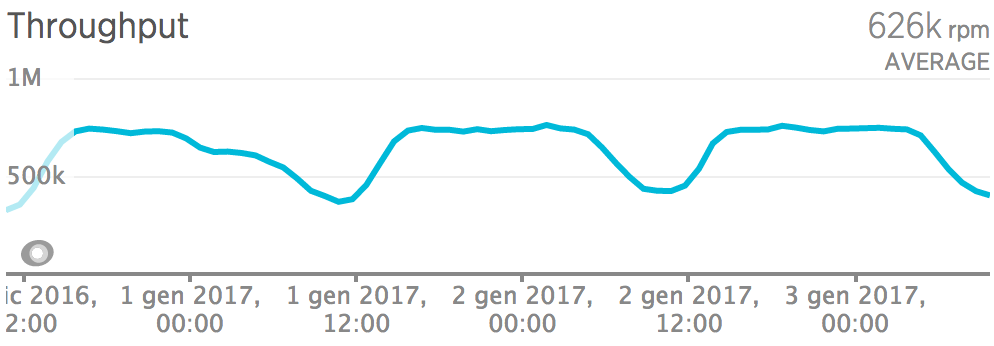

Performance degradation

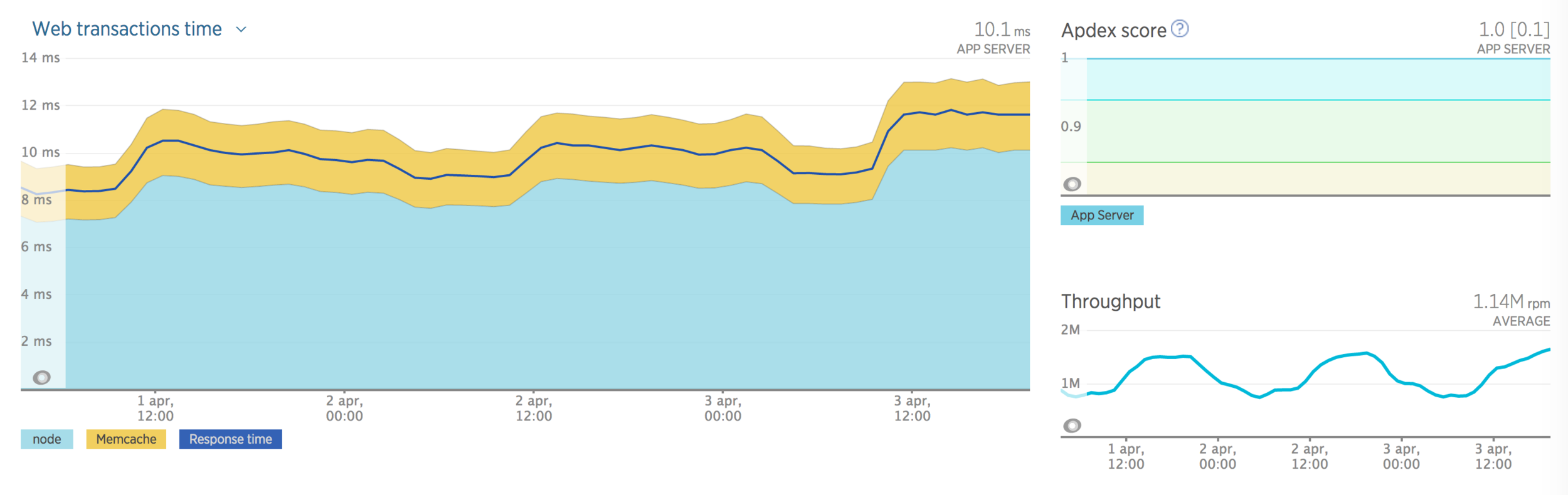

Throughput

CPU Utilization

Execution time degradation:

from 10ms to over 1250ms

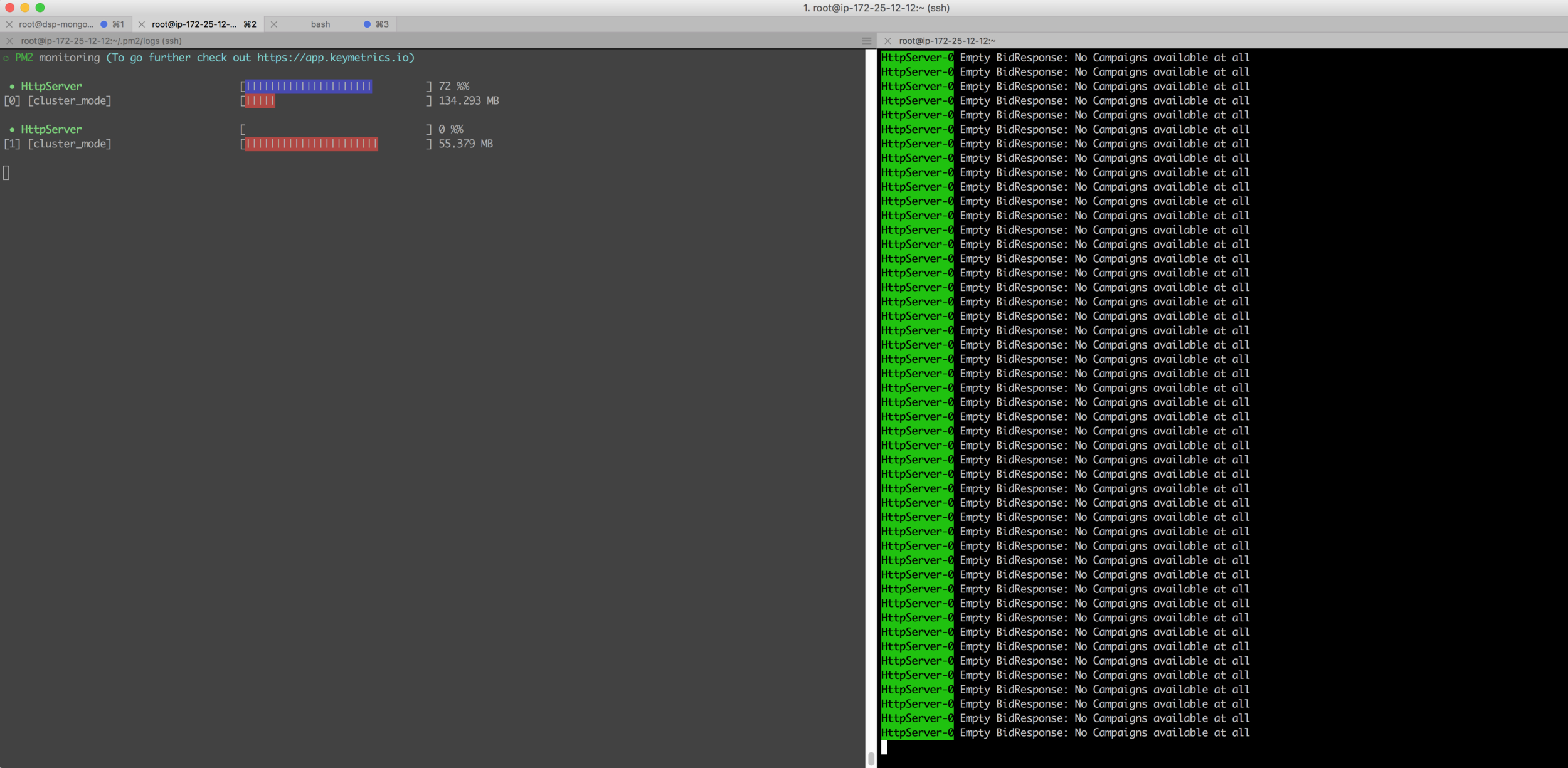

PM2

Advanced, production process manager for Node.js

apps:

  - script: app/index.js

    instances: 0 # pm2 uses a process per core

    exec_mode: cluster

$ npm install pm2 -g

$ pm2 start process.yml

http://pm2.keymetrics.io/

PM2 cluster configuration

The PM2 cluster process itself uses a core, like any other Node.js process

$ pm2 monit

HAProxy

HAProxy is a free, very fast and reliable solution offering high availability, load balancing, and proxying for TCP and HTTP-based applications. It is particularly suited for very high traffic web sites and powers quite a number of the world's most visited ones.

http://www.haproxy.org/

ab test

# ab -n 100000 -c 200 http://localhost/

PM2:

Time taken for tests: 38.352 seconds

Requests per second: 2607.40 [#/sec] (mean)

Time per request: 76.705 [ms] (mean)

Time per request: 0.384 [ms] (mean, across all concurrent requests)

Transfer rate: 295.37 [Kbytes/sec] received

HAProxy:

Time taken for tests: 24.791 seconds

Requests per second: 4033.71 [#/sec] (mean)

Time per request: 49.582 [ms] (mean)

Time per request: 0.248 [ms] (mean, across all concurrent requests)

Transfer rate: 464.82 [Kbytes/sec] received
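To put the two runs side by side, the short calculation below derives the relative change from the figures reported above. Only the numbers come from the ab output; the helper `percentChange` and the object names are illustrative.

```javascript
// Figures copied from the two ab runs above.
const pm2Run = { requestsPerSecond: 2607.40, msPerRequest: 76.705 };
const haproxyRun = { requestsPerSecond: 4033.71, msPerRequest: 49.582 };

// Relative change from one measurement to another, in percent.
function percentChange(from, to) {
  return ((to - from) / from) * 100;
}

const throughputGain = percentChange(pm2Run.requestsPerSecond, haproxyRun.requestsPerSecond);
const latencyChange = percentChange(pm2Run.msPerRequest, haproxyRun.msPerRequest);

console.log(`throughput: ${throughputGain.toFixed(1)}%`);
console.log(`mean time per request: ${latencyChange.toFixed(1)}%`);
```

In this test the HAProxy setup served roughly 55% more requests per second, and the mean time per request dropped by about 35%.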

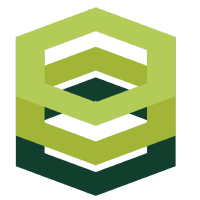

CPU heap

RisingStack

CPU profiles snapshot

https://trace.risingstack.com/

Libuv

libuv is a multi-platform support library with a focus on asynchronous I/O.

Features

- Full-featured event loop backed by epoll, kqueue, IOCP, event ports.

- Asynchronous TCP and UDP sockets

- Asynchronous DNS resolution

- Asynchronous file and file system operations

- File system events

- ANSI escape code controlled TTY

- IPC with socket sharing, using Unix domain sockets or named pipes

- Child processes

- Thread pool

- Signal handling

- High resolution clock

- Threading and synchronization primitives

http://libuv.org/
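The thread pool in the list above defaults to four threads, and libuv reads the `UV_THREADPOOL_SIZE` environment variable once, when the pool is first used. The sketch below sets the variable at the very top of the program and then queues several thread-pool-backed hashing jobs. The value 8, the iteration count, and the helper `hashMany` are arbitrary choices for illustration; setting the variable in the shell before starting node is the more robust route, since in-process assignment may not take effect on every platform.

```javascript
const crypto = require('crypto');

// Must run before the first thread-pool-backed call (fs, dns.lookup,
// crypto, zlib), because libuv reads the variable only once.
process.env.UV_THREADPOOL_SIZE = '8'; // illustrative value, stored as a string

// Queue `count` PBKDF2 jobs on the libuv thread pool and report the total
// elapsed time once all of them have completed.
function hashMany(count, done) {
  let pending = count;
  const started = Date.now();
  for (let i = 0; i < count; i++) {
    crypto.pbkdf2('secret', 'salt', 20000, 64, 'sha512', (err) => {
      if (err) throw err;
      if (--pending === 0) done(Date.now() - started);
    });
  }
}

hashMany(8, (ms) => console.log(`8 hashes finished in ${ms} ms`));
```

With the default pool of four threads, eight jobs of this kind run in two waves; with eight threads and enough cores they can run together, which is a simple way to see whether the setting took effect.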

How to Libuv

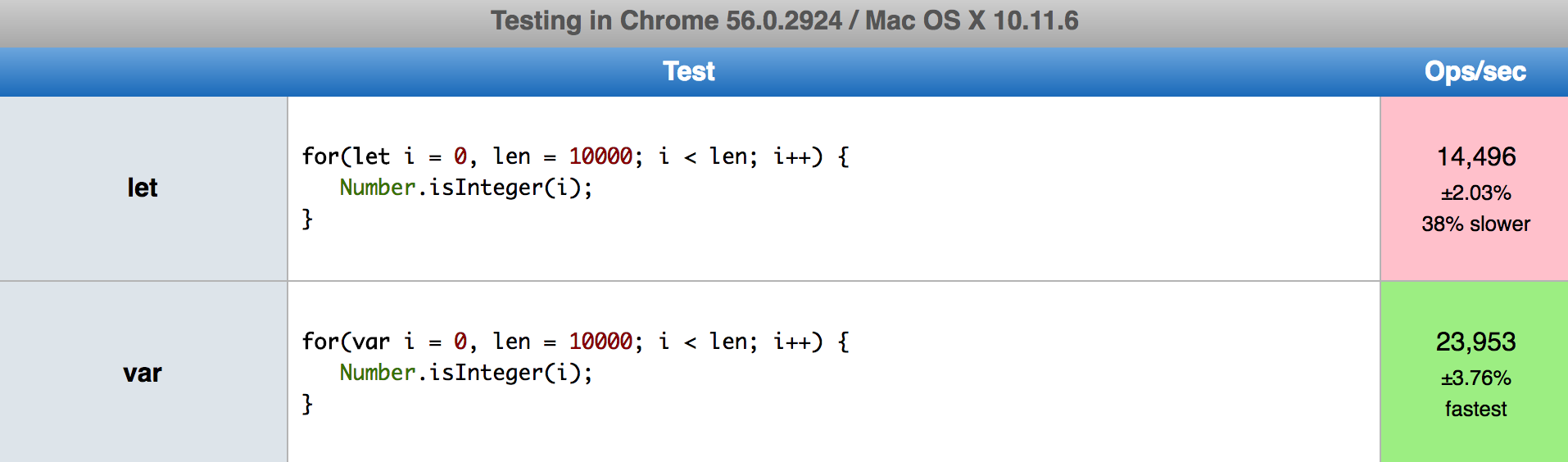

process.env.UV_THREADPOOL_SIZE = 100;

Var vs let

The difference is scoping. var is scoped to the nearest function block and let is scoped to the nearest enclosing block.

Variables declared with let are not accessible before they are declared in their enclosing block.
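A small sketch of the two scoping rules and of the access-before-declaration behaviour. The function names are made up for the example.

```javascript
// `var` is hoisted to the enclosing function, so it is visible after the block.
function varIsFunctionScoped() {
  if (true) {
    var counter = 1;
  }
  return typeof counter;
}

// `let` lives only inside the block that declares it.
function letIsBlockScoped() {
  if (true) {
    let counter = 1;
  }
  return typeof counter;
}

// Reading a `let` binding before its declaration throws.
function readBeforeDeclaration() {
  try {
    return early; // throws: `early` exists in this block but is not initialized yet
    let early = 1;
  } catch (err) {
    return err.name;
  }
}

console.log(varIsFunctionScoped());   // number
console.log(letIsBlockScoped());      // undefined
console.log(readBeforeDeclaration()); // ReferenceError
```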

https://jsperf.com/var-vs-let-performance

max-inlined-source-size

function add(x, y) {

// Addition is one of the four elementary,

// mathematical operation of arithmetic, with the other being subtractions,

// multiplications and divisions. The addition of two whole numbers is the total

// amount of those quantitiy combined. For example in the picture on the right,

// there is a combination of three apples and two apples together making a total

// of 5 apples. This observation is equivalent to the mathematical expression

// "3 + 2 = 5"

// Besides counting fruit, addition can also represent combining other physical object.

return(x + y);

}

function add(x, y) {

// Addition is one of the four elementary,

// mathematical operation of arithmetic with the other being subtraction,

// multiplication and division. The addition of two whole numbers is the total

// amount of those quantitiy combined. For example in the picture on the right,

// there is a combination of three apples and two apples together making a total

// of 5 apples. This observation is equivalent to the mathematical expression

// "3 + 2 = 5"

// Besides counting fruit, addition can also represent combining other physical object.

return(x + y);

}

for(let i = 0; i < 500000000; i++) {

if (add(i, i++) < 5) {

//

}

}

max-inlined-source-size

$ node --max-inlined-source-size=1000 index.js

$ node -v

v6.9.4

$ time node moreThan600Chars.js # with “let”

real 0m2.119s

user 0m2.108s

sys 0m0.012s

$ time node lessThan600Chars.js # with “let”

real 0m0.800s

user 0m0.796s

sys 0m0.000s

$ time node moreThan600Chars.js # with “var”

real 0m1.557s

user 0m1.552s

sys 0m0.000s

$ time node lessThan600Chars.js # with “var”

real 0m0.322s

user 0m0.296s

sys 0m0.008s

WTF!
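The timings are consistent with the inlining heuristic of the optimizing compiler in the V8 that shipped with Node.js 6, which, as far as I know, skipped functions whose source text exceeded 600 characters by default, comments included. A quick way to see which side of that limit a function falls on is to measure its source text. The helper names below are hypothetical, and the 600 default and the `<=` comparison are assumptions based on that V8 generation; later V8 releases use different heuristics.

```javascript
// Assumed default of --max-inlined-source-size in the V8 bundled with Node.js 6.
const DEFAULT_MAX_INLINED_SOURCE_SIZE = 600;

// Function.prototype.toString returns the function's source text,
// comments and whitespace included.
function sourceSize(fn) {
  return fn.toString().length;
}

// True when the function's source text is within the given character budget.
function fitsInlineBudget(fn, budget = DEFAULT_MAX_INLINED_SOURCE_SIZE) {
  return sourceSize(fn) <= budget;
}

function add(x, y) {
  // A short comment keeps this function well under the budget.
  return x + y;
}

console.log(sourceSize(add), fitsInlineBudget(add));
```

Running the same check against the two versions of `add` above shows which one crosses the limit, without having to count characters by hand.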

Lodash

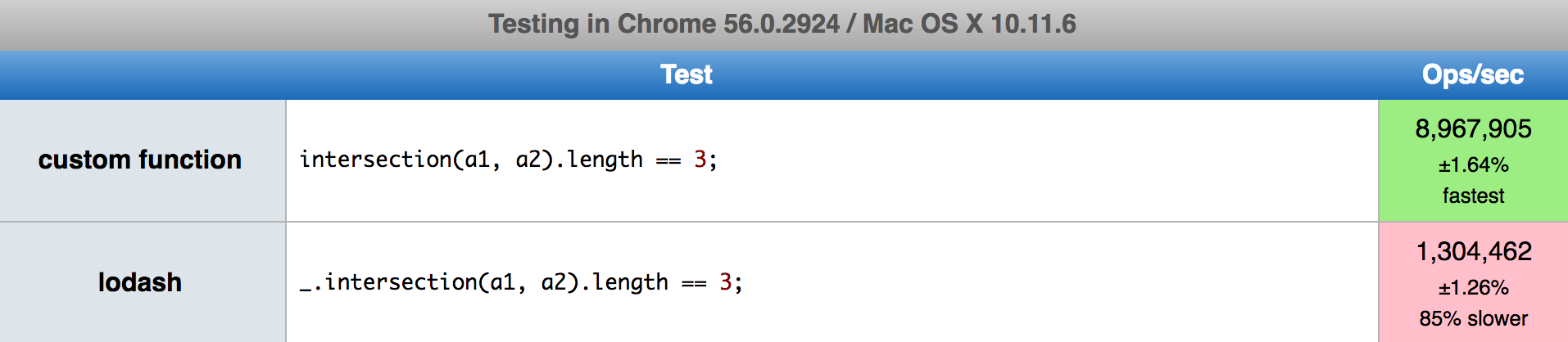

https://jsperf.com/intersection-benchmark

Lodash (like Underscore) is a very powerful library for front-end work: it does its job well and it is cross-browser. On a fast back end it adds a lot of computation without benefit.

Garbage Collection

If you do not have memory leaks, you can trigger garbage collection manually. This keeps the garbage collector from starting at arbitrary moments, even when there is nothing to collect.
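A guarded version of the idea might look like the sketch below. `global.gc` only exists when node is started with `--expose-gc`, so the helper (a hypothetical name, `scheduleManualGc`) checks for it first instead of failing at the first tick. The 30-second interval matches the value used in this talk.

```javascript
// Schedule periodic manual garbage collection when it is available.
// Returns the timer, or null when node was started without --expose-gc.
function scheduleManualGc(intervalMs) {
  if (typeof global.gc !== 'function') {
    return null;
  }
  const timer = setInterval(() => global.gc(), intervalMs);
  timer.unref(); // do not keep the process alive just for the GC timer
  return timer;
}

const gcTimer = scheduleManualGc(30000);
console.log(gcTimer ? 'manual GC every 30 s' : 'start node with --expose-gc to enable manual GC');
```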

$ node --expose-gc index.js

setInterval(global.gc, 30000);

Result

{

"apps": [

{

"name": "http01",

"args": "5001",

"script": "app/index.js",

"node_args": [

"--nouse-idle-notification", # noticed reduction in pauses since GC was getting invoked

"--expose-gc", # to manually trigger gc() method

"--max-old-space-size=2048", # to increase space size for old generation objects

"--max-inlined-source-size=1000" # to increase the inlined source size

]

}

]

}

http://slides.com/testinaweb/nodejs/fullscreen

The talk covers issues encountered running a single Node.js endpoint that serves an average of 2M requests per minute: tools, tricks, architecture, and data structures.
